Part V - The MLOps Toolbox: From Jenkins to Kubeflow

Mapping Your DevOps Tools to Their ML Counterparts

"The best tools are the ones that feel familiar yet powerful enough to solve new challenges." - Kelsey Hightower

Remember when Docker and Kubernetes revolutionized how we deploy applications? Today, we're experiencing a similar transformation with ML tools. Let's explore how your existing DevOps tooling knowledge maps to the MLOps world.

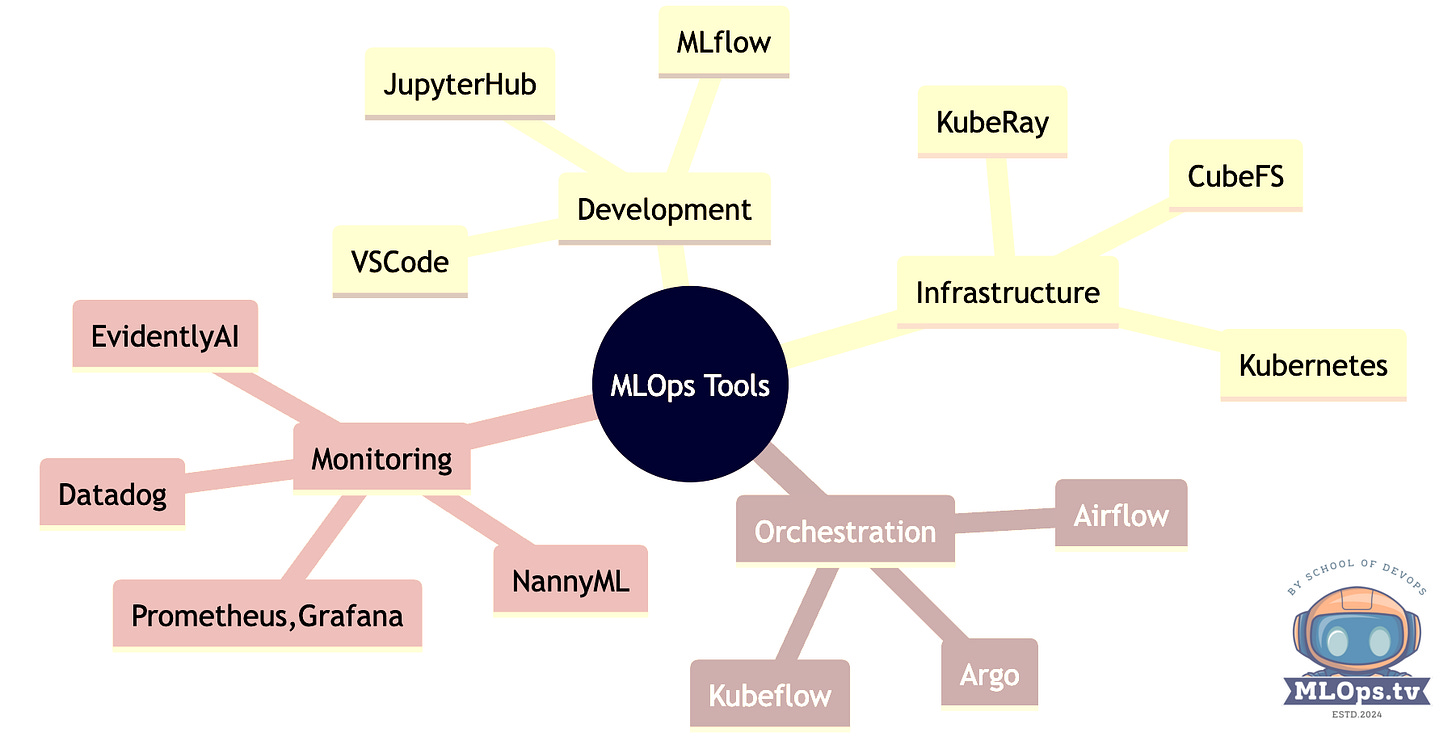

The MLOps Tool Landscape

Tool Categories Explained:

📓 Development: Interactive ML experimentation and coding

🏗️ Infrastructure: Foundation for ML workloads

🔄 Orchestration: Workflow and pipeline management

📈 Monitoring: Tracking both system and model health

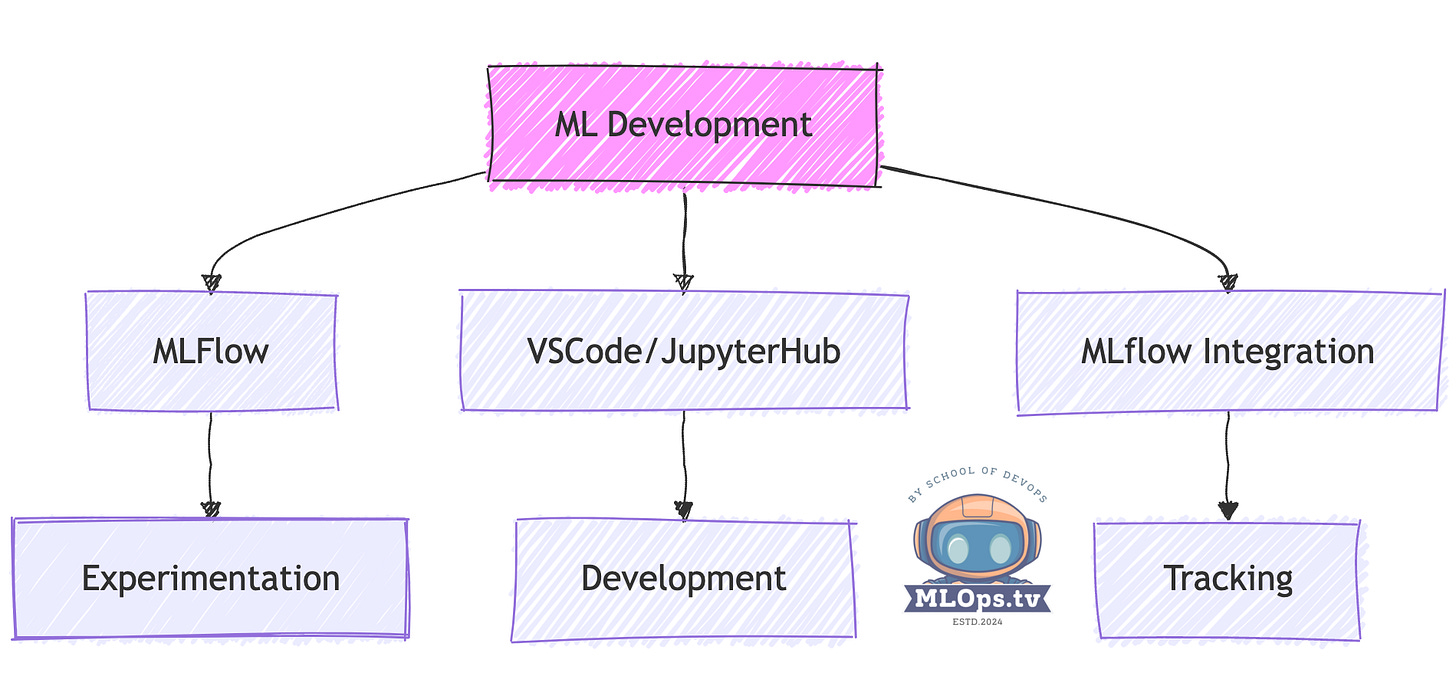

Core Development Environment

Development Tools Explained:

📓 JupyterHub: Multi-user notebooks for experimentation

🔧 VS Code: Code editor with Python, Jupyter, and ML extensions

📊 MLflow: Experiment tracking and model management
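To make "experiment tracking" concrete, here is a minimal sketch that uses only the Python standard library. It is not MLflow's API: the `RunTracker` class, its method names, and the JSON-file layout are all hypothetical, chosen only to show the general pattern of recording parameters and metrics per run and then comparing runs.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


class RunTracker:
    """Hypothetical stand-in for an experiment tracker (not MLflow's API)."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params, metrics):
        """Persist one run's parameters and metrics as a JSON file."""
        run_id = uuid.uuid4().hex[:8]
        record = {
            "run_id": run_id,
            "logged_at": time.time(),
            "params": params,
            "metrics": metrics,
        }
        (self.root / f"{run_id}.json").write_text(json.dumps(record))
        return run_id

    def best_run(self, metric, higher_is_better=True):
        """Return the stored run with the best value for `metric`, or None."""
        runs = [json.loads(p.read_text()) for p in self.root.glob("*.json")]
        runs = [r for r in runs if metric in r["metrics"]]
        if not runs:
            return None
        pick = max if higher_is_better else min
        return pick(runs, key=lambda r: r["metrics"][metric])


# Two training runs with different learning rates (illustrative numbers).
tracker = RunTracker(tempfile.mkdtemp())
tracker.log_run({"lr": 0.1}, {"accuracy": 0.81})
tracker.log_run({"lr": 0.01}, {"accuracy": 0.88})

best = tracker.best_run("accuracy")
print(best["params"])  # {'lr': 0.01}
```

A real tracker adds a UI, artifact storage, and a model registry on top of this idea, but the core habit is the same: every run leaves behind a record you can query later.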

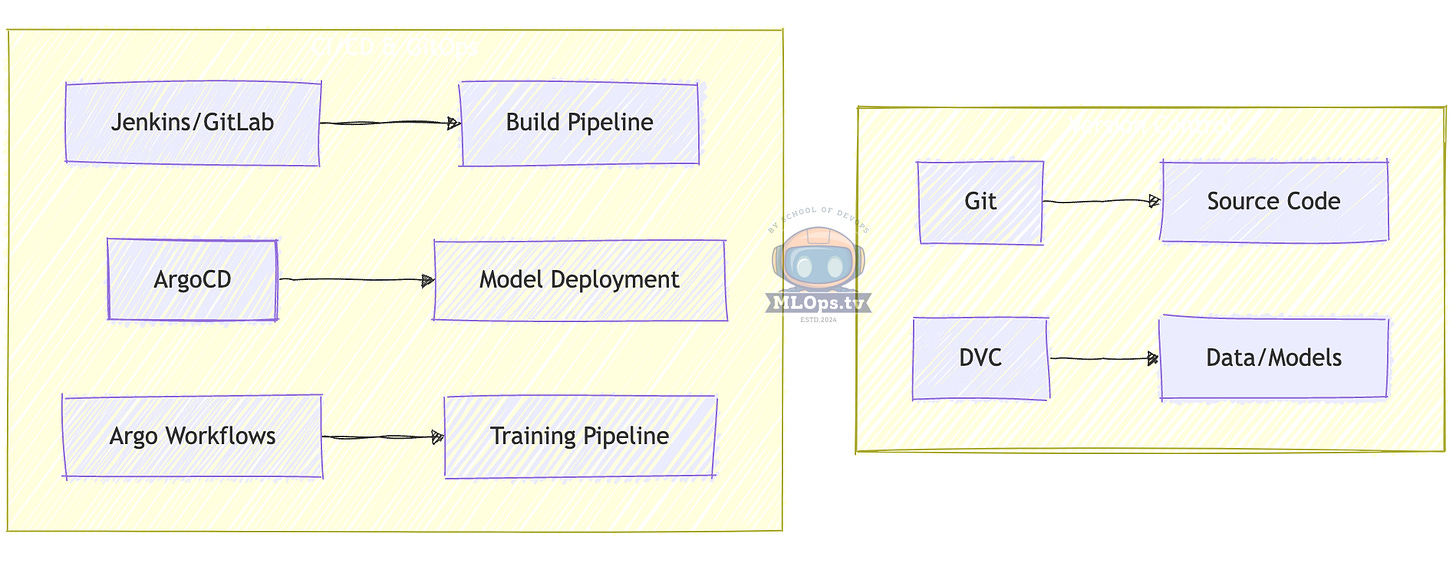

DevOps to MLOps Tool Mapping

1. Version Control and CI/CD

Tools Explained:

📝 Git/DVC: Code and data version control

🔄 Argo Workflows: ML training orchestration

🚀 ArgoCD: GitOps for model deployment

📦 Jenkins/GitLab CI: Traditional CI/CD pipelines
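If you are wondering how data can be version controlled alongside code, the usual idea is to keep the large file in a content-addressed cache and commit only a small pointer file to Git. The sketch below illustrates that idea with the standard library. It is not DVC's implementation or file format: the `track` and `checkout` helpers and the `.ptr.json` suffix are hypothetical names used for illustration.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def track(data_file, cache_dir):
    """Copy data into a content-addressed cache and write a small pointer file."""
    data_file, cache_dir = Path(data_file), Path(cache_dir)
    digest = hashlib.sha256(data_file.read_bytes()).hexdigest()
    cache_dir.mkdir(parents=True, exist_ok=True)
    cached = cache_dir / digest
    if not cached.exists():
        shutil.copyfile(data_file, cached)
    pointer = data_file.with_name(data_file.name + ".ptr.json")
    pointer.write_text(json.dumps({"path": data_file.name, "sha256": digest}))
    return pointer


def checkout(pointer_file, cache_dir):
    """Restore the data file that a pointer file refers to."""
    pointer_file = Path(pointer_file)
    meta = json.loads(pointer_file.read_text())
    target = pointer_file.with_name(meta["path"])
    shutil.copyfile(Path(cache_dir) / meta["sha256"], target)
    return target


workdir = Path(tempfile.mkdtemp())
cache = workdir / "cache"
data = workdir / "train.csv"

data.write_text("id,label\n1,0\n")           # version 1 of the dataset
pointer_v1 = track(data, cache).read_text()  # this small text is what Git would store

data.write_text("id,label\n1,0\n2,1\n")      # version 2 overwrites the file
track(data, cache)

# Roll back: restore the old pointer (as a Git checkout would), then fetch the data.
pointer = data.with_name("train.csv.ptr.json")
pointer.write_text(pointer_v1)
checkout(pointer, cache)
print(data.read_text() == "id,label\n1,0\n")  # True
```

The design choice worth noticing is that Git history stays small, because it only ever sees the pointer, while the cache (or a remote bucket in a real setup) holds every dataset version.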

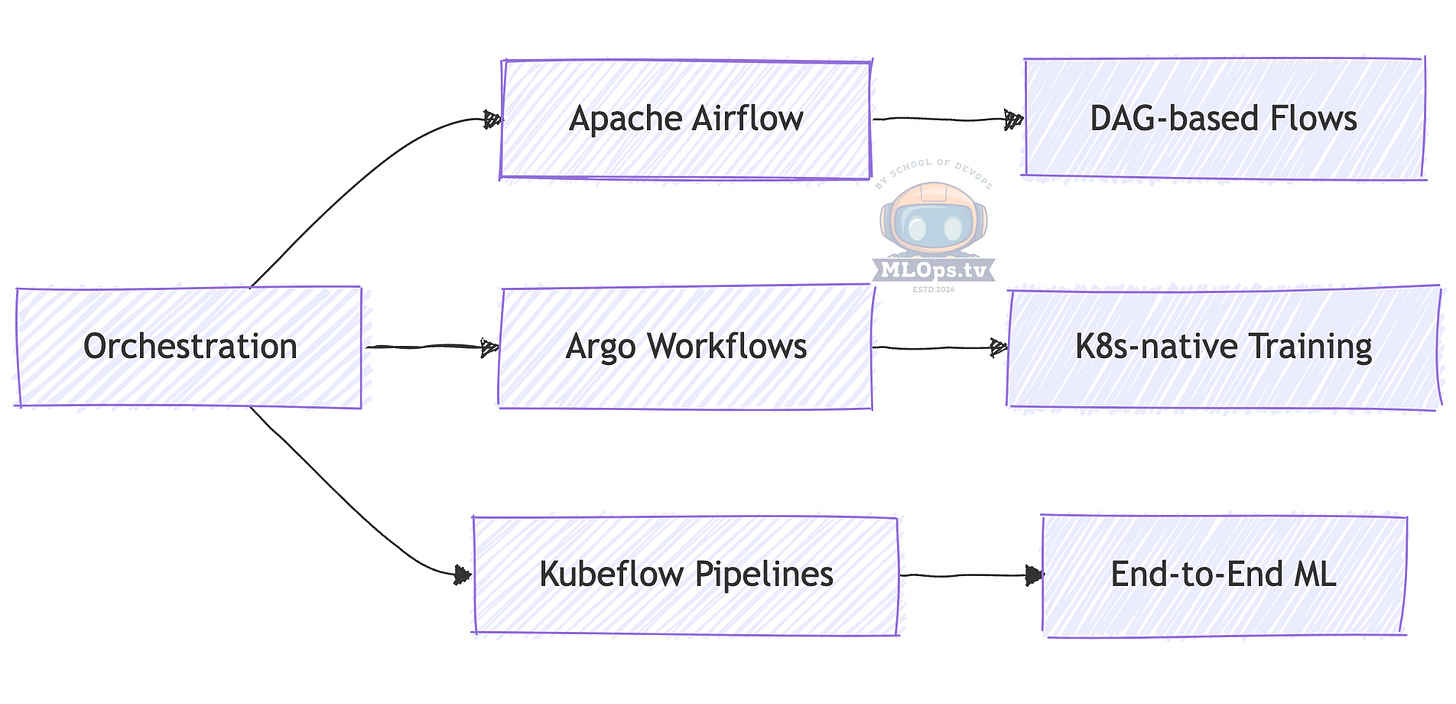

2. Workflow Orchestration

Orchestration Tools Explained:

🌊 Apache Airflow: General-purpose workflow orchestration

⚡ Argo Workflows: Kubernetes-native ML workflows

🚀 Kubeflow Pipelines: End-to-end ML pipelines
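All three orchestrators share one core job: run the steps of a pipeline in dependency order. Here is a minimal sketch of that idea using Python's standard `graphlib` module (Python 3.9+). The `run_pipeline` helper and the step names are hypothetical, and the "model" is just a mean, so treat this as an illustration of the concept rather than how Airflow, Argo Workflows, or Kubeflow Pipelines are implemented.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, dependencies):
    """Run each step after all of its upstream steps have finished.

    steps:        dict mapping step name -> callable(context) -> result
    dependencies: dict mapping step name -> set of upstream step names
    """
    context = {}  # results of finished steps, keyed by step name
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        context[name] = steps[name](context)
        order.append(name)
    return order, context


# A toy three-step ML pipeline.
steps = {
    "extract": lambda ctx: [1, 2, 3, 4],
    "train": lambda ctx: sum(ctx["extract"]) / len(ctx["extract"]),
    "evaluate": lambda ctx: max(abs(x - ctx["train"]) for x in ctx["extract"]),
}
dependencies = {
    "extract": set(),
    "train": {"extract"},
    "evaluate": {"train"},
}

order, context = run_pipeline(steps, dependencies)
print(order)                # ['extract', 'train', 'evaluate']
print(context["evaluate"])  # 1.5
```

Production orchestrators add what this sketch leaves out: retries, scheduling, parallel execution, and running each step in its own container.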

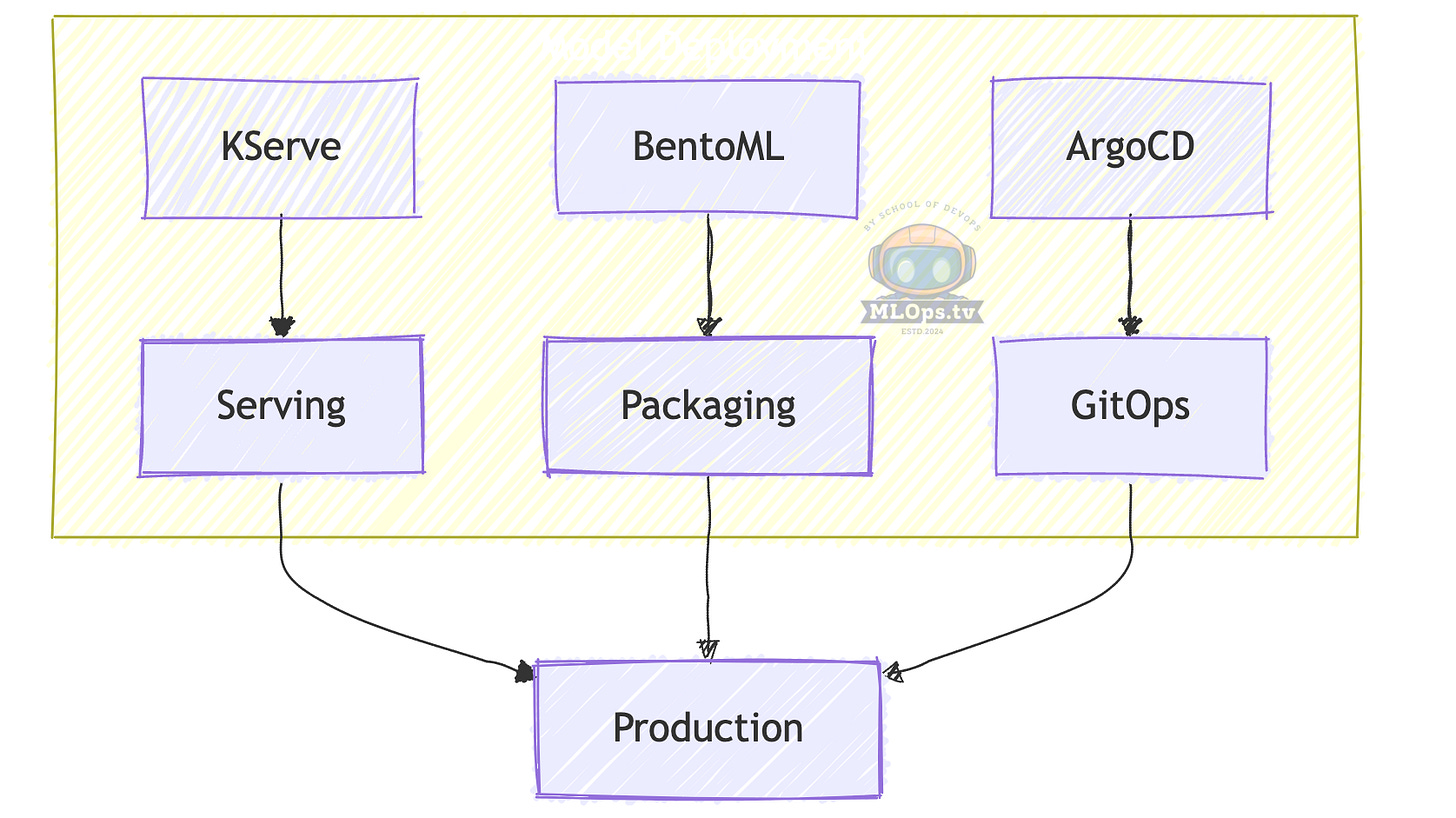

3. Model Serving and Deployment

Deployment Tools Explained:

🎯 KServe: Kubernetes-native model serving

📦 BentoML: Model packaging and serving

🔄 ArgoCD: GitOps-based deployment

🚀 KFServing: Kubeflow's original serving component, since renamed KServe
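From a client's point of view, a served model is just an HTTP endpoint. The sketch below builds a prediction request in the shape of KServe's V1 inference protocol, which posts an `instances` list to `/v1/models/<name>:predict` and reads back a `predictions` list. The host name, model name, and helper functions are hypothetical, and the request is only constructed, never sent, so nothing here depends on a running cluster.

```python
import json
import urllib.request


def build_predict_request(base_url, model_name, instances):
    """Build (but do not send) a V1-style prediction request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(response_body):
    """Extract the predictions list from a V1-style JSON response body."""
    return json.loads(response_body)["predictions"]


# Hypothetical endpoint and model name, for illustration only.
req = build_predict_request(
    "http://models.example.internal", "iris", [[5.1, 3.5, 1.4, 0.2]]
)
print(req.get_method(), req.full_url)

# With a live endpoint you would send it like this:
#   with urllib.request.urlopen(req) as resp:
#       predictions = parse_predictions(resp.read())
```

Because the contract is plain JSON over HTTP, the same client code keeps working when the model behind the endpoint is retrained or swapped out.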

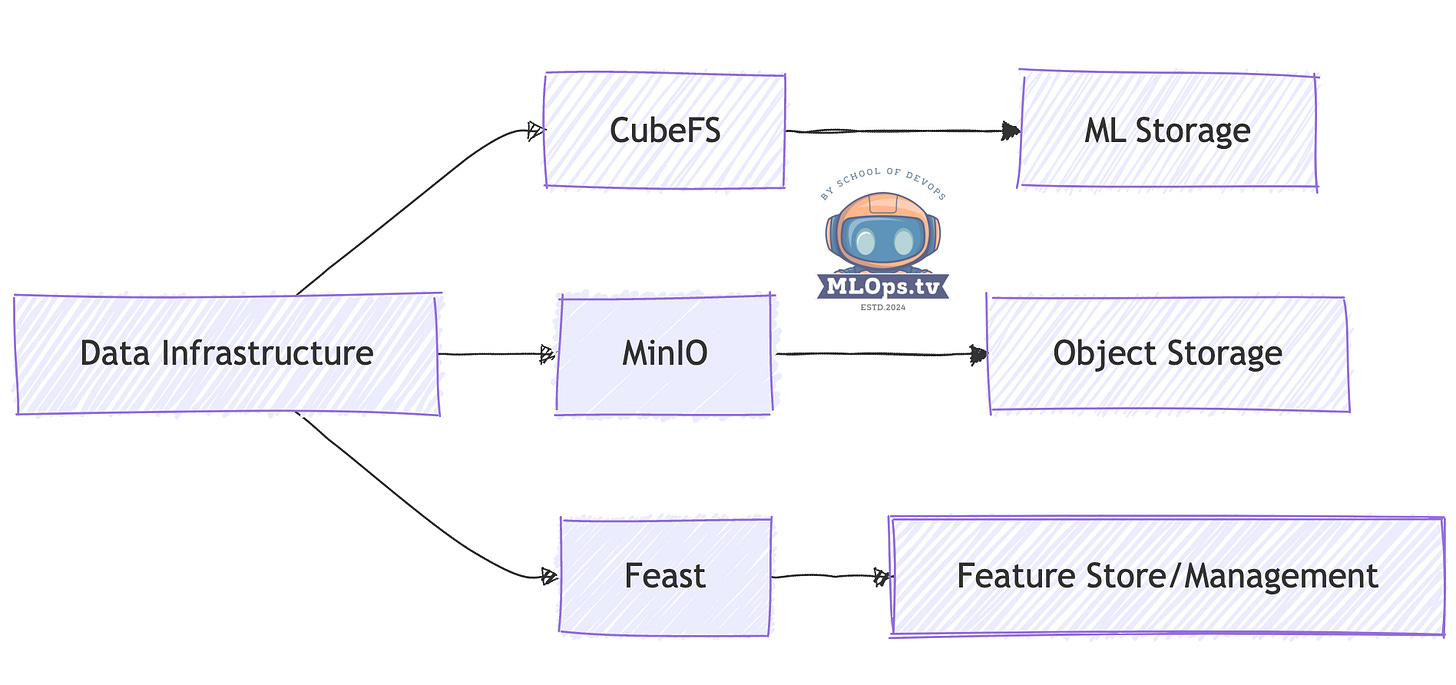

4. Storage and Data Management

Storage Solutions Explained:

💾 CubeFS: Distributed storage for ML workloads

🗄️ MinIO: S3-compatible object storage

📊 Feast: Open-source feature store for production ML

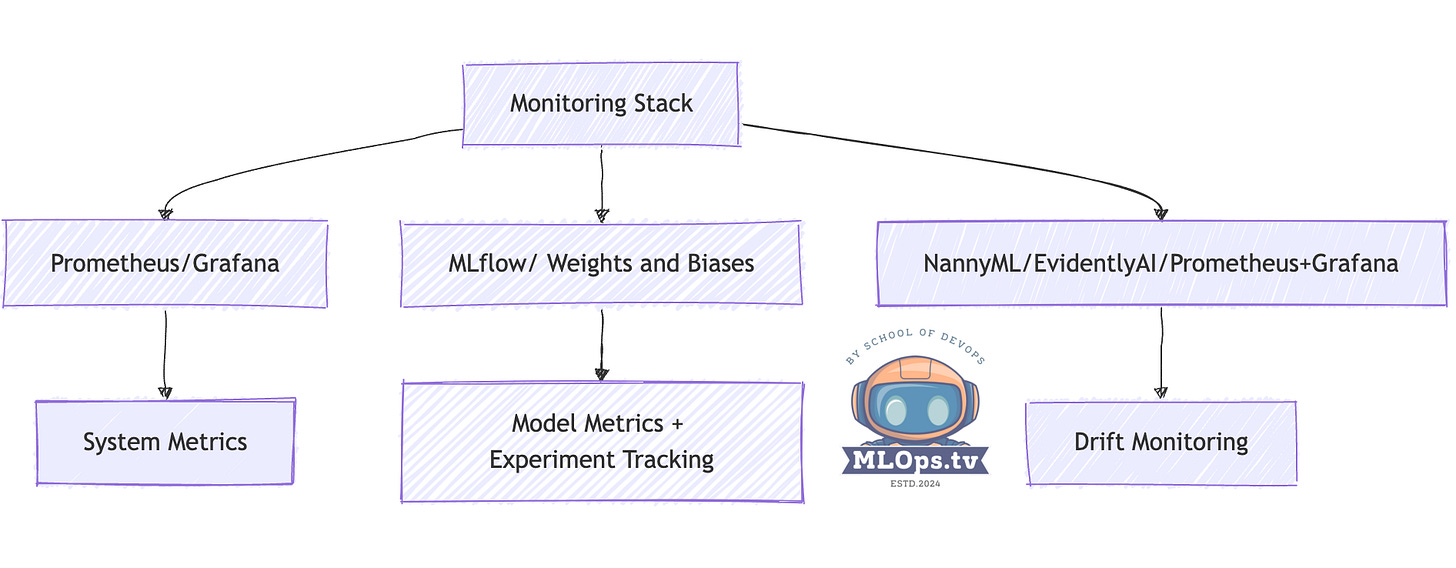

5. Monitoring and Observability

Monitoring Tools Explained:

📈 Prometheus/Grafana: System and resource monitoring

🎯 MLflow / Weights & Biases: Model performance metrics and experiment tracking

📊 NannyML / Evidently AI / Prometheus + Grafana: Drift monitoring

What is drift monitoring? It is the practice of continuously checking, after deployment, whether the input data distribution has changed (data drift) or whether the relationship between the inputs and the target has changed (concept drift), which usually shows up as degraded model performance. Catching these shifts early helps keep models accurate and reliable in production. Tools like NannyML and Evidently AI specialize in detecting drift, and their reports help teams decide when to retrain a model, refresh the training data, or adjust the deployment strategy.
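To show what a data drift check can look like, here is a minimal sketch of the Population Stability Index (PSI), one common way to compare a production sample of a feature against a reference sample. This is a swapped-in illustration, not how NannyML or Evidently AI work internally: the `psi` function, the bin count, and the sample data are assumptions, and the thresholds in the comments are a commonly cited rule of thumb rather than a standard.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bins are equal-width over the reference range. Values outside that range
    are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Reference data seen at training time vs. a shifted production sample.
reference = list(range(100))
shifted = [v + 30 for v in reference]

print(psi(reference, reference))       # 0.0 (identical distributions)
print(psi(reference, shifted) > 0.25)  # True

# Rule of thumb often quoted for PSI (an assumption, tune for your data):
#   below 0.1 little change, 0.1 to 0.25 moderate shift, above 0.25 significant shift
```

In a monitoring setup, a value like this would be computed per feature on a schedule and exported as a metric, so that an alert can fire when it crosses the threshold you have chosen.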

Modern MLOps Stacks

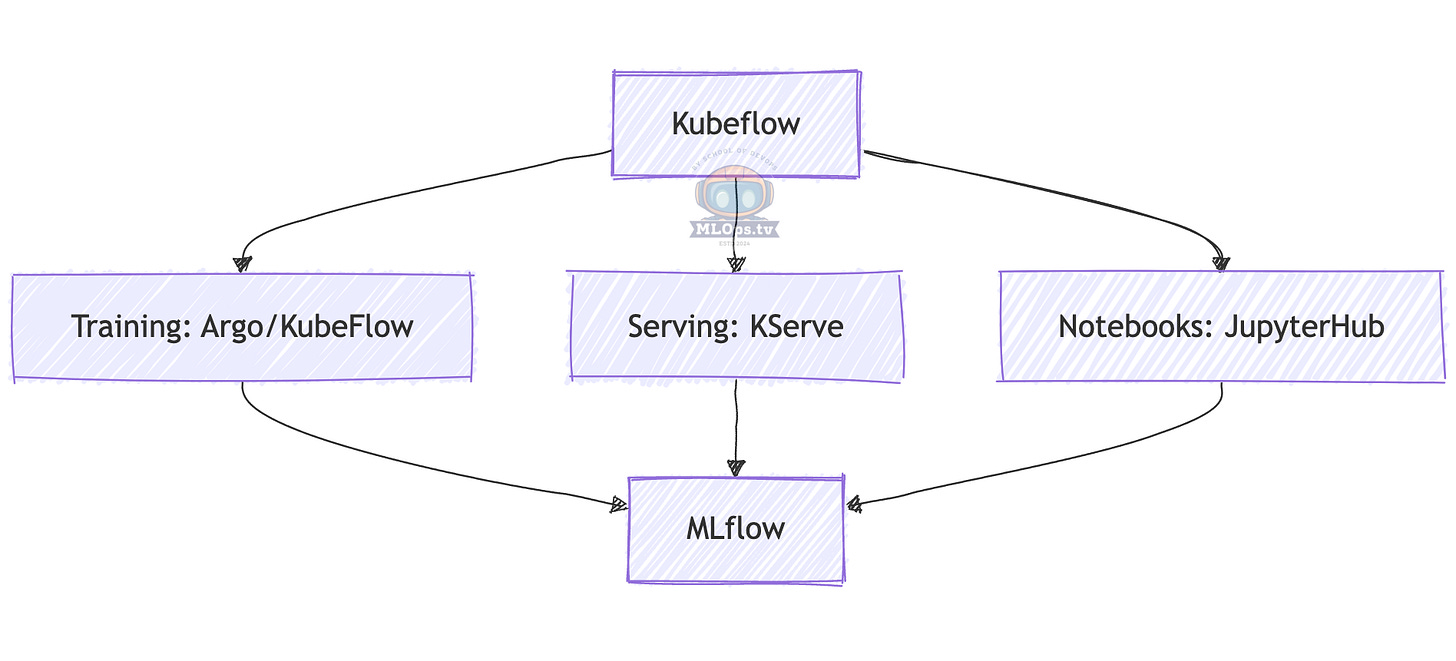

1. Kubernetes-Native Stack

Stack Components Explained:

🎯 Kubeflow: ML workflow orchestration

🚀 KServe: Model serving platform

📓 JupyterHub: Development environment

📊 MLflow: Metrics and model tracking

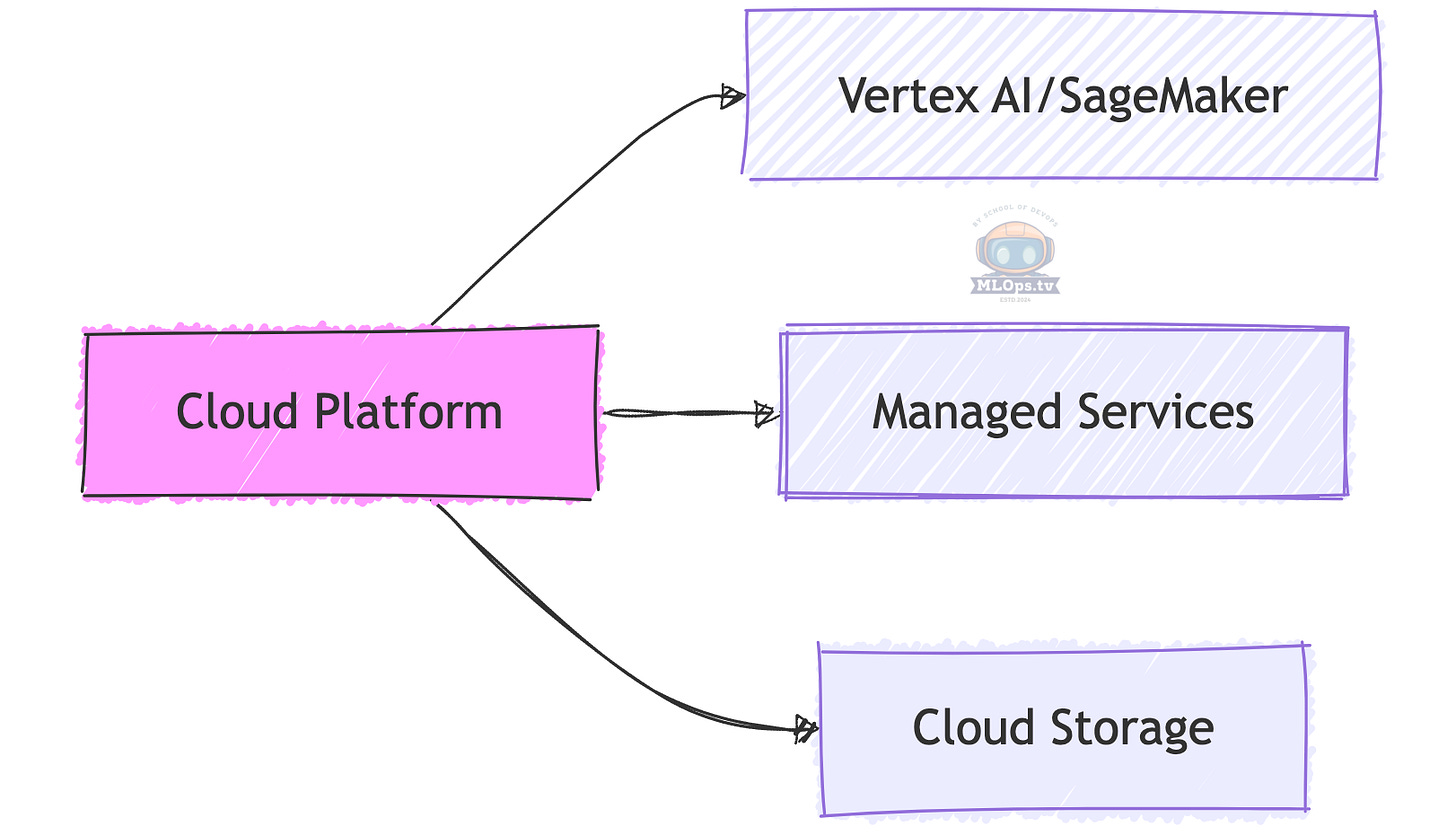

2. Cloud-Native Stack

Cloud Components Explained:

☁️ Managed Platforms: End-to-end ML services such as Amazon SageMaker, Google Vertex AI, and Azure Machine Learning

🗃️ Managed Services: Specialized ML tools

💾 Cloud Storage: Scalable data storage

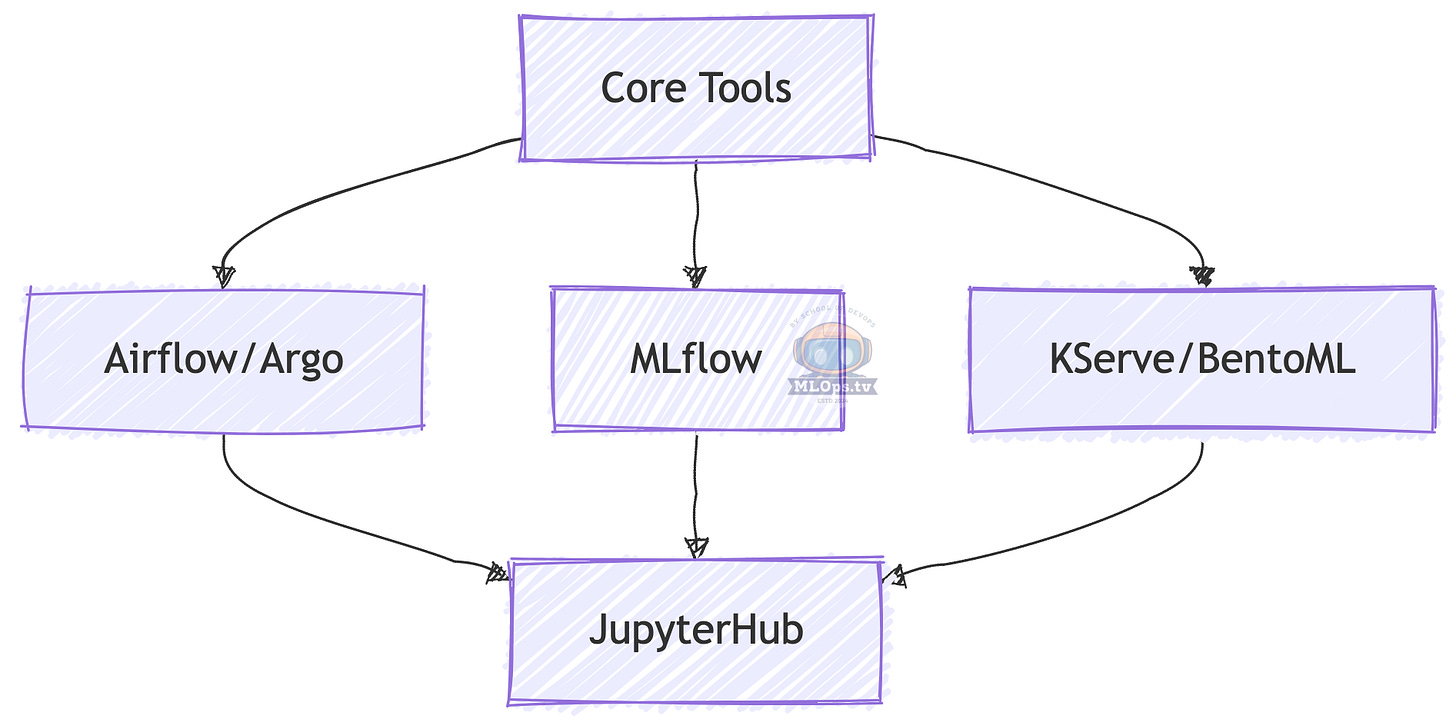

3. Open-Source Stack

Stack Components Explained:

🔄 Airflow/Argo: Workflow management

📊 MLflow: ML lifecycle management

🚀 KServe/BentoML: Model serving

📓 JupyterHub: Development platform

Getting Started: A Practical Approach

Development Environment

📓 Set up JupyterHub for experimentation

🔧 Configure VSCode with ML extensions

📊 Integrate MLflow for tracking

Basic Pipeline

🔄 Implement Argo Workflows or Airflow

📦 Set up model versioning with MLflow

🚀 Deploy models using KServe or BentoML

Advanced Features

⚡ Add feature store

📈 Implement comprehensive monitoring

🔄 Set up GitOps with ArgoCD

"Start with the tools that solve your immediate problems, then expand as your needs grow." - Eugene Yan

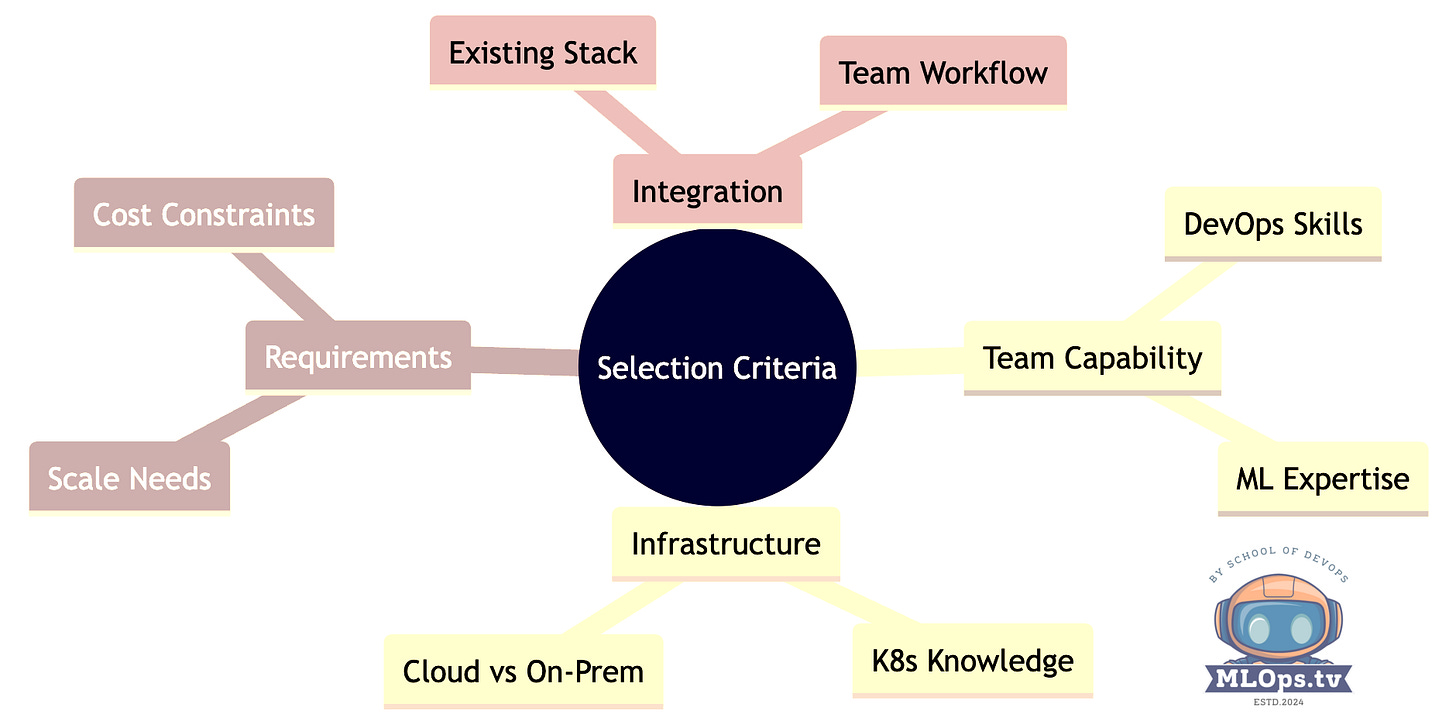

Tool Selection Guide

Selection Factors Explained:

👥 Team Capability: Match tools to team skills

🏗️ Infrastructure: Consider existing platforms

📋 Requirements: Align with business needs

🔄 Integration: Ensure ecosystem compatibility

Key Takeaways

Start Small: Begin with essential tools and expand

Leverage Existing Skills: Use familiar DevOps patterns

Choose Integrated Solutions: Prefer tools that work together

Consider Growth: Plan for scaling and expansion

"The right tools make MLOps feel like a natural extension of DevOps." - Chip Huyen

What's Next?

In our next article, "LLMOps: Operating in the Age of Large Language Models," we'll explore specialized tools and practices for managing LLM deployments, including vector databases and prompt management systems.

————————

Folks, if you are enjoying this series on #MLOps and find it useful, do leave a comment. Also let me know what you would like to learn next so that I can plan the content accordingly.