Part IV - MLOps Decoded: DevOps' Cousin in the AI World

Understanding Where DevOps Ends and MLOps Begins

"MLOps isn't about replacing DevOps – it's about extending it to handle the unique challenges of machine learning systems."

- Ville Tuulos, Netflix

Remember when we thought continuous deployment was complex? Welcome to the world of continuous learning systems. Today we'll decode MLOps and its siblings, AIOps and LLMOps, and see how they build on our DevOps foundation while solving challenges of their own.

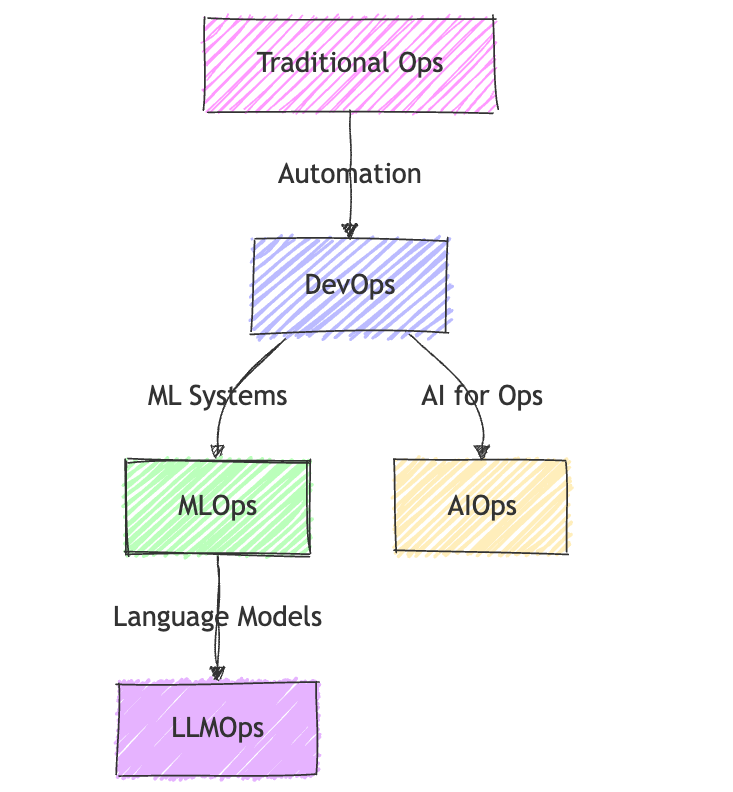

The Ops Family Tree

First, let's clear up the confusion between different "Ops" practices:

Ops Evolution Explained:

🔧 Traditional Ops: Manual system management

🚀 DevOps: Automated software delivery

🤖 MLOps: ML system lifecycle management

🔍 AIOps: AI-powered IT operations

📝 LLMOps: Large language model operations

Quick Definitions:

DevOps: Automating and monitoring the software development lifecycle

MLOps: Managing the lifecycle of ML models from development to production

AIOps: Using AI to improve IT operations

LLMOps: Specialized MLOps for Large Language Models

"The key difference isn't in the tools – it's in what you're trying to optimize for."

- David Aronchick, former Kubernetes Product Manager

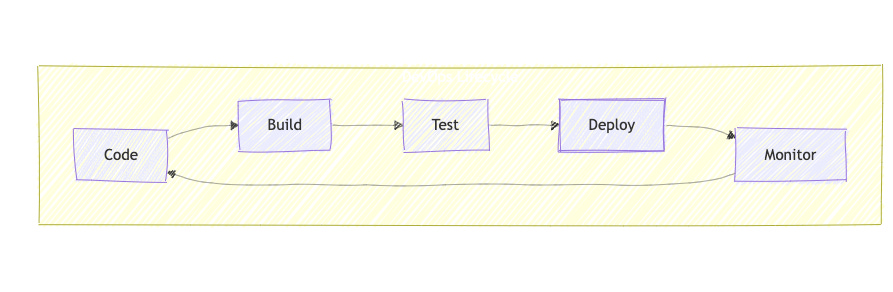

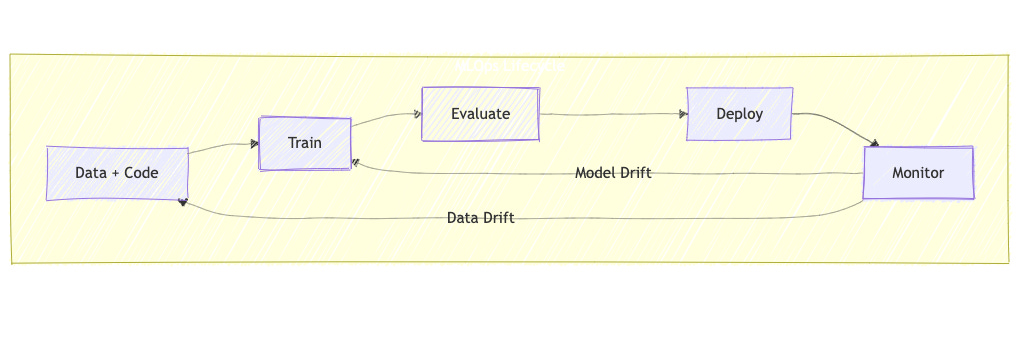

The Evolution from DevOps to MLOps

Let's compare the lifecycles:

Lifecycle Differences Explained:

🔄 DevOps Loop: Predictable, code-driven changes

📊 MLOps Loop: Data and model performance drive updates

⚠️ Key Difference: Beyond code changes, MLOps has two extra feedback loops, driven by data drift and model drift

Key Differences: DevOps vs MLOps

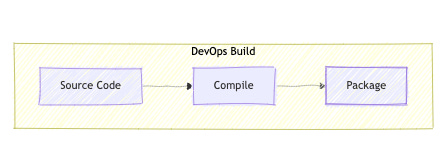

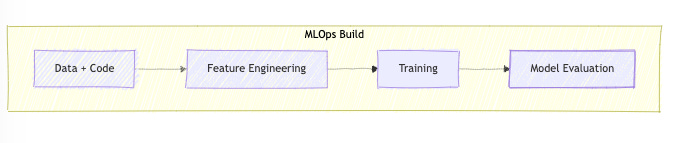

1. The Build Phase

DevOps Build Explained:

📝 Source Code: Your application code in version control

🔨 Compile: Transform source code into executable format

📦 Package: Bundle into deployable artifacts (e.g., containers)

MLOps Build Explained:

🗃️ Data + Code: Both training data and model code are your source materials

⚙️ Feature Engineering: Transform raw data into model-ready format

🧪 Training: Produce a model from the prepared data (the ML equivalent of compiling)

📊 Model Evaluation: Validate model performance (unlike traditional builds, this can fail even if code is perfect!)
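To make the "can fail even if code is perfect" point concrete, here is a minimal sketch of an ML build whose last step is an evaluation gate. It assumes a toy one-feature threshold classifier and a hypothetical `MIN_ACCURACY` bar; a real pipeline would train a real model, but the shape (train, evaluate on held-out data, fail the build below a threshold) is the same.

```python
from statistics import mean

MIN_ACCURACY = 0.8  # hypothetical quality bar; pick one that fits your use case


def train_threshold_model(xs, ys):
    """'Training': choose the cut-off that best separates class 0 from class 1."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(xs)):
        acc = mean(1.0 if (x >= t) == bool(y) else 0.0 for x, y in zip(xs, ys))
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def evaluate(threshold, xs, ys):
    """'Model evaluation': accuracy on held-out data the model never saw."""
    return mean(1.0 if (x >= threshold) == bool(y) else 0.0 for x, y in zip(xs, ys))


def build(train, holdout):
    """Train, evaluate, and fail the build if quality is below the bar."""
    threshold = train_threshold_model(*train)
    accuracy = evaluate(threshold, *holdout)
    # Every line of code above ran without error, yet the build can still fail here.
    if accuracy < MIN_ACCURACY:
        raise RuntimeError(f"Evaluation gate failed: accuracy={accuracy:.2f}")
    return threshold, accuracy
```

With the same training data, `build(([1, 2, 3, 7, 8, 9], [0, 0, 0, 1, 1, 1]), ([2, 4, 8, 9], [0, 0, 1, 1]))` passes, while a held-out set the model handles poorly, such as `([2, 4, 6, 8], [0, 0, 1, 1])`, raises and stops the pipeline.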

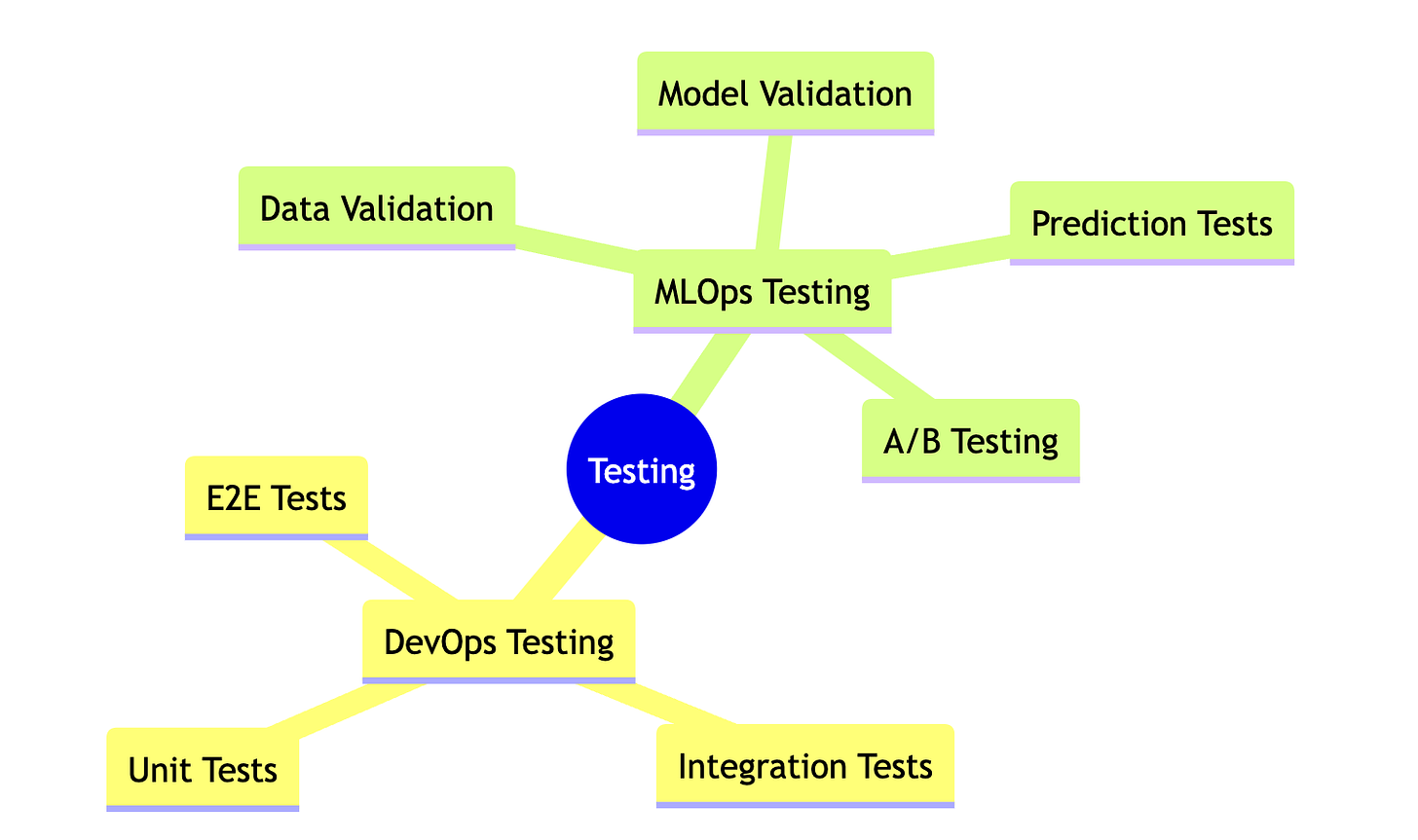

2. Testing Strategies

DevOps Testing Explained:

🔍 Unit Tests: Verify individual code components

🔄 Integration Tests: Check component interactions

🎯 E2E Tests: Validate complete application flow

MLOps Testing Explained:

📊 Data Validation: Verify data quality, distribution, and completeness

🤖 Model Validation: Check model accuracy, bias, and performance

🎯 Prediction Tests: Ensure sensible outputs for known inputs

🔄 A/B Testing: Compare model versions in production
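Data validation and prediction tests can look much like ordinary unit tests. The sketch below assumes a hypothetical two-column schema with allowed value ranges and a toy `predict` function; in practice teams often reach for a dedicated data-validation library, but the idea is the same: check the data before you trust the model, and pin down outputs for known inputs.

```python
SCHEMA = {"age": (0, 120), "income": (0, 10_000_000)}  # hypothetical column -> allowed range


def validate_rows(rows):
    """Data validation: collect every problem rather than stopping at the first."""
    problems = []
    for i, row in enumerate(rows):
        for column, (low, high) in SCHEMA.items():
            value = row.get(column)
            if value is None:
                problems.append(f"row {i}: missing '{column}'")
            elif not low <= value <= high:
                problems.append(f"row {i}: '{column}'={value} outside [{low}, {high}]")
    return problems


def predict(row):
    """Toy model (illustrative only): approve when income is at least 30,000."""
    return row["income"] >= 30_000


def test_prediction_sanity():
    """Prediction tests: known inputs must produce sensible outputs."""
    assert predict({"age": 40, "income": 90_000}) is True
    assert predict({"age": 40, "income": 0}) is False
```

For example, `validate_rows([{"age": 35, "income": 50_000}, {"age": -3, "income": 20_000}, {"income": 1}])` reports an out-of-range age in row 1 and a missing age in row 2, so a pipeline can refuse to train on that batch.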

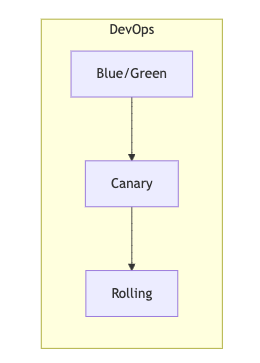

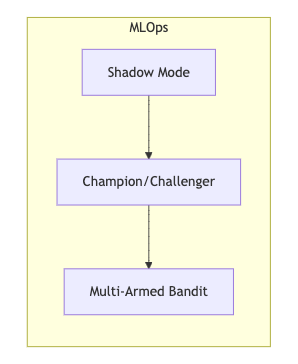

3. Deployment Patterns

DevOps Deployment Explained:

🔄 Blue/Green: Instant switch between two identical environments

🐤 Canary: Gradually route traffic to new version

🌊 Rolling: Update instances one by one
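Canary routing is normally done by a load balancer or service mesh, but the core idea fits in a few lines. This sketch assumes each request carries a string user ID and uses a hash of it, so a given user consistently lands on the same version while the canary percentage is raised step by step.

```python
import hashlib


def route(user_id: str, canary_percent: int) -> str:
    """Send a stable `canary_percent`% of users to the new version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Gradual rollout: users already on the canary stay there as the percentage grows.
for percent in (1, 5, 25, 50, 100):
    print(percent, route("user-42", percent))
```

Hashing instead of picking randomly per request keeps each user's experience consistent and makes rollbacks predictable: dropping the percentage back to 0 returns everyone to the stable version.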

MLOps Deployment Explained:

👥 Shadow Mode: The new model runs in parallel with the current one; its predictions are logged but never served to users

🏆 Champion/Challenger: Current best model vs. new contender

🎰 Multi-Armed Bandit: Automatically route traffic based on model performance
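Two of these patterns can be sketched directly. Below, `serve_with_shadow` returns only the champion's prediction while logging the shadow model's answer, and `EpsilonGreedyRouter` implements epsilon-greedy, one simple multi-armed bandit strategy (others, such as Thompson sampling, exist). The reward signal (for example, 1.0 for a click) and all names are illustrative assumptions.

```python
import random
from typing import Callable, List

Model = Callable[[float], float]


def serve_with_shadow(x: float, champion: Model, shadow: Model, log: List[dict]) -> float:
    """Shadow mode: both models predict, but only the champion's answer is served."""
    served = champion(x)
    log.append({"input": x, "served": served, "shadow": shadow(x)})
    return served


class EpsilonGreedyRouter:
    """Epsilon-greedy bandit: usually route to the best model so far, but send a
    fraction `epsilon` of requests to a random model to keep exploring."""

    def __init__(self, model_names, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.pulls = {name: 0 for name in model_names}
        self.reward_sum = {name: 0.0 for name in model_names}

    def mean_reward(self, name):
        n = self.pulls[name]
        return self.reward_sum[name] / n if n else 0.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.pulls))  # explore
        return max(self.pulls, key=self.mean_reward)  # exploit

    def update(self, name, reward):
        """Record feedback for the model that handled the request."""
        self.pulls[name] += 1
        self.reward_sum[name] += reward
```

In a simulation where the challenger earns reward more often than the champion, the router gradually shifts most traffic to the challenger without anyone flipping a switch, which is the appeal of bandits over a fixed A/B split.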

The Unique Challenges of MLOps

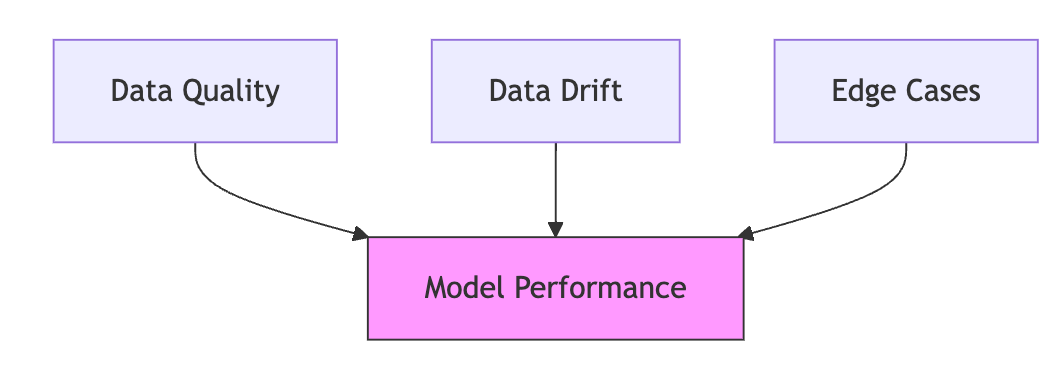

1. Data Dependencies

Key Dependencies Explained:

📊 Data Quality: Garbage in, garbage out - data accuracy directly impacts model output

🔄 Data Drift: Real-world data patterns shift over time, away from what the model was trained on

⚠️ Edge Cases: Unusual scenarios that can break model performance
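Data drift can be quantified. One common metric is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in the training data versus production. The sketch below assumes equal-width bins taken from the reference data; a frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import bisect
import math
from typing import List, Sequence


def bin_edges(reference: Sequence[float], n_bins: int = 10) -> List[float]:
    """Interior edges of equal-width bins spanning the reference (training) data."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins
    return [lo + width * i for i in range(1, n_bins)]


def proportions(values, edges, eps=1e-6):
    """Share of values per bin; values outside the range fall into the end bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)


def psi(reference, current, n_bins=10):
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected)."""
    edges = bin_edges(reference, n_bins)
    expected = proportions(reference, edges)
    actual = proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

Comparing a feature with itself gives a PSI of 0, while shifting every value well outside the training range produces a large score that a monitoring job could turn into an alert or a retraining trigger.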

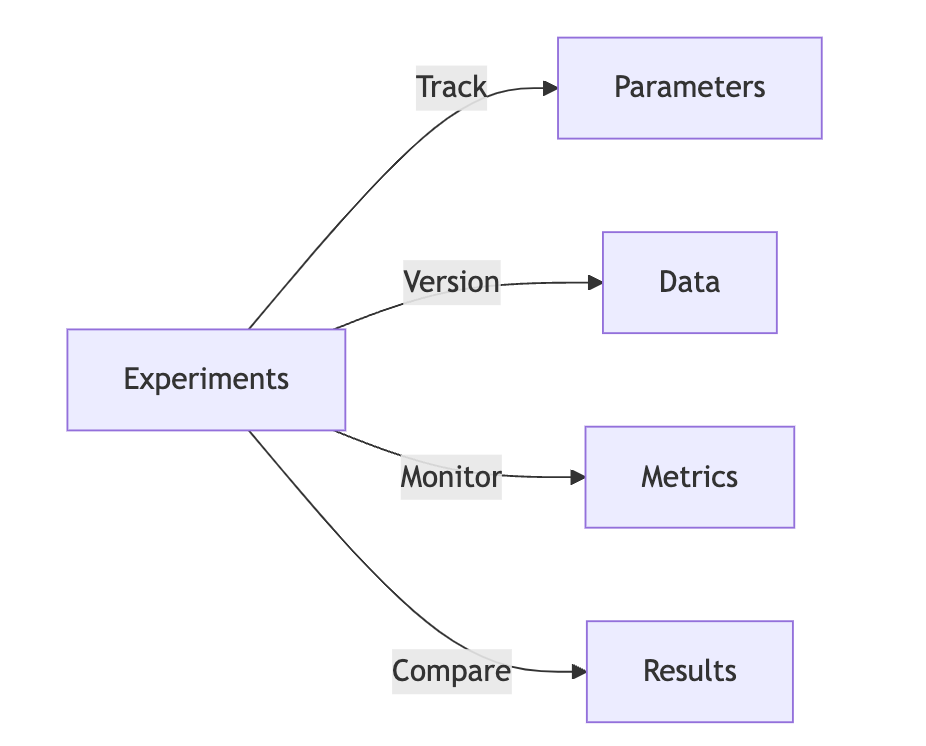

2. Experiment Management

Experiment Components Explained:

⚙️ Parameters: Model configuration settings

📊 Data: Versioned datasets used for training

📈 Metrics: Performance indicators like accuracy

🎯 Results: Outcomes for different experiment runs
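Dedicated trackers such as MLflow handle this for you, but the underlying record is simple. This sketch assumes datasets small enough to serialize as JSON and versions them by content hash, so two runs on identical data share a data version; `ExperimentRun` and `best_run` are hypothetical names for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def dataset_version(rows) -> str:
    """Content hash of the training data: identical data yields the same version id."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ExperimentRun:
    run_id: str
    params: dict          # model configuration, e.g. learning rate
    data_version: str     # which dataset the run was trained on
    metrics: dict = field(default_factory=dict)  # e.g. {"accuracy": 0.86}


def best_run(runs, metric):
    """Results: pick the run with the highest value of `metric`."""
    return max(runs, key=lambda r: r.metrics[metric])
```

Because each run is a plain dataclass, `json.dumps(asdict(run))` turns it into a log line you can store, diff, and query later, which is what makes an experiment reproducible months afterwards.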

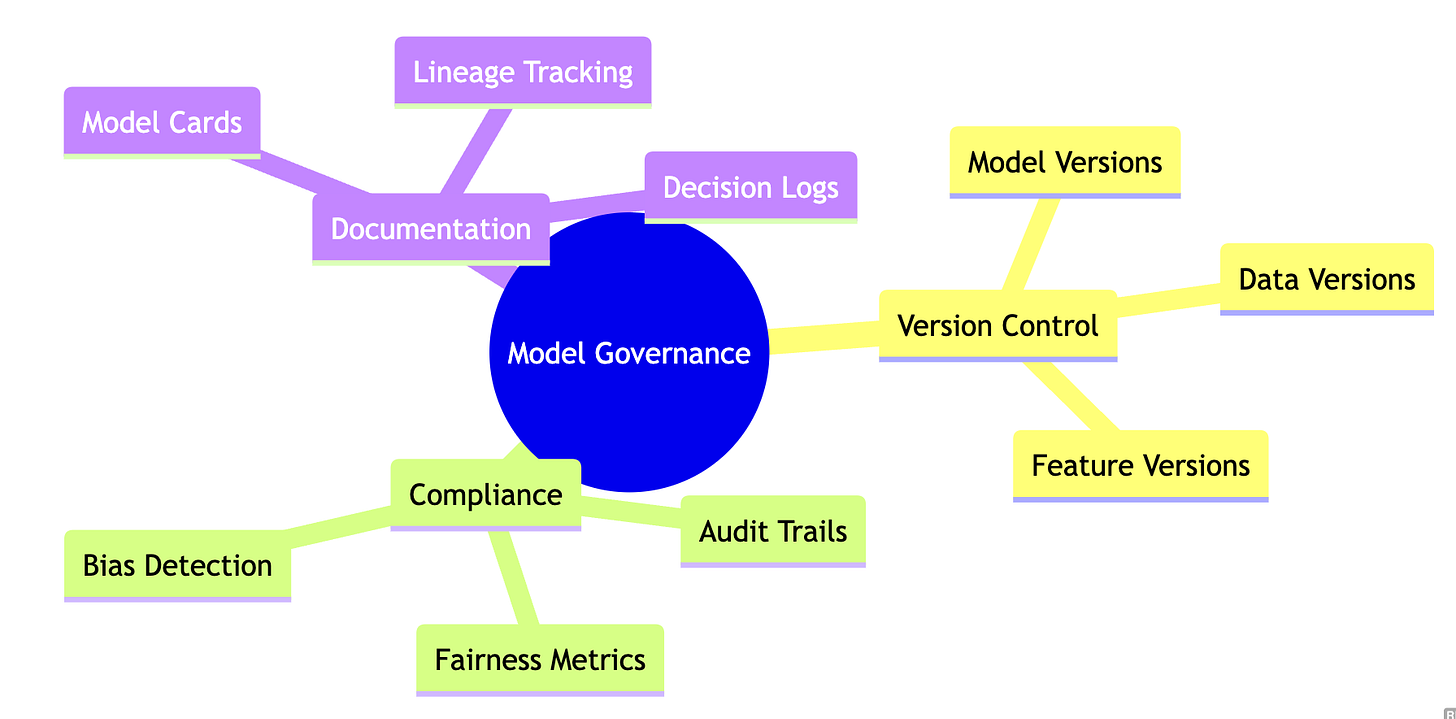

3. Model Governance

Governance Elements Explained:

📚 Version Control: Track all components that influence model behavior

⚖️ Compliance: Ensure model fairness and regulatory compliance

📝 Documentation: Maintain clear records of model decisions and changes
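Compliance checks can be automated as part of the release process. The sketch below uses demographic parity difference (the gap in positive-prediction rates between groups), which is only one of several fairness definitions, and a hypothetical `max_gap` policy. It returns a small record that can be stored alongside the model version for documentation and audit.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Fraction of positive predictions (1/True) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


def governance_check(model_version, predictions, groups, max_gap=0.1):
    """Hypothetical release gate that also produces an audit record."""
    gap = demographic_parity_difference(predictions, groups)
    return {"model_version": model_version, "parity_gap": round(gap, 4), "approved": gap <= max_gap}
```

If group "a" receives positive predictions half the time and group "b" a quarter of the time, the gap is 0.25 and this gate would block the release until someone reviews it.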

MLOps in Practice: A Day in the Life

Let's compare typical scenarios:

DevOps Day

Monitor application metrics

Deploy new features

Scale infrastructure

Handle incidents

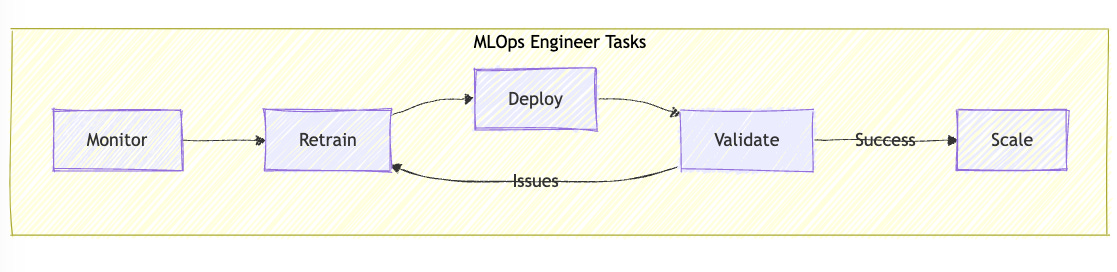

MLOps Day

Monitor model performance AND application metrics

Retrain models with new data

Scale training AND inference infrastructure

Handle data quality AND system incidents

Daily Tasks Explained:

👀 Monitor: Track both system health and model performance

🔄 Retrain: Update models with new data when needed

🚀 Deploy: Roll out new model versions safely

✅ Validate: Ensure model performs well in production

📈 Scale: Adjust resources based on demand
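A daily MLOps check often boils down to comparing a few signals against thresholds and deciding what to do. This is a minimal sketch with hypothetical threshold values; `max_drift` stands for whatever drift score your monitoring produces (for example, a Population Stability Index).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    min_accuracy: float = 0.90          # hypothetical model-quality target
    max_drift: float = 0.25             # hypothetical drift-score alert level
    max_p95_latency_ms: float = 200.0   # hypothetical latency objective


def daily_check(accuracy, drift_score, p95_latency_ms, t=Thresholds()):
    """Turn monitored signals into the day's actions."""
    actions = []
    if p95_latency_ms > t.max_p95_latency_ms:
        actions.append("scale inference")  # a system problem, as in DevOps
    if drift_score > t.max_drift or accuracy < t.min_accuracy:
        actions.append("retrain")  # a data/model problem, unique to MLOps
        actions.append("validate candidate before deploy")
    return actions or ["no action"]
```

Note that the retraining branch can fire while every system metric is green; that is exactly the second set of concerns an MLOps engineer adds to a DevOps day.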

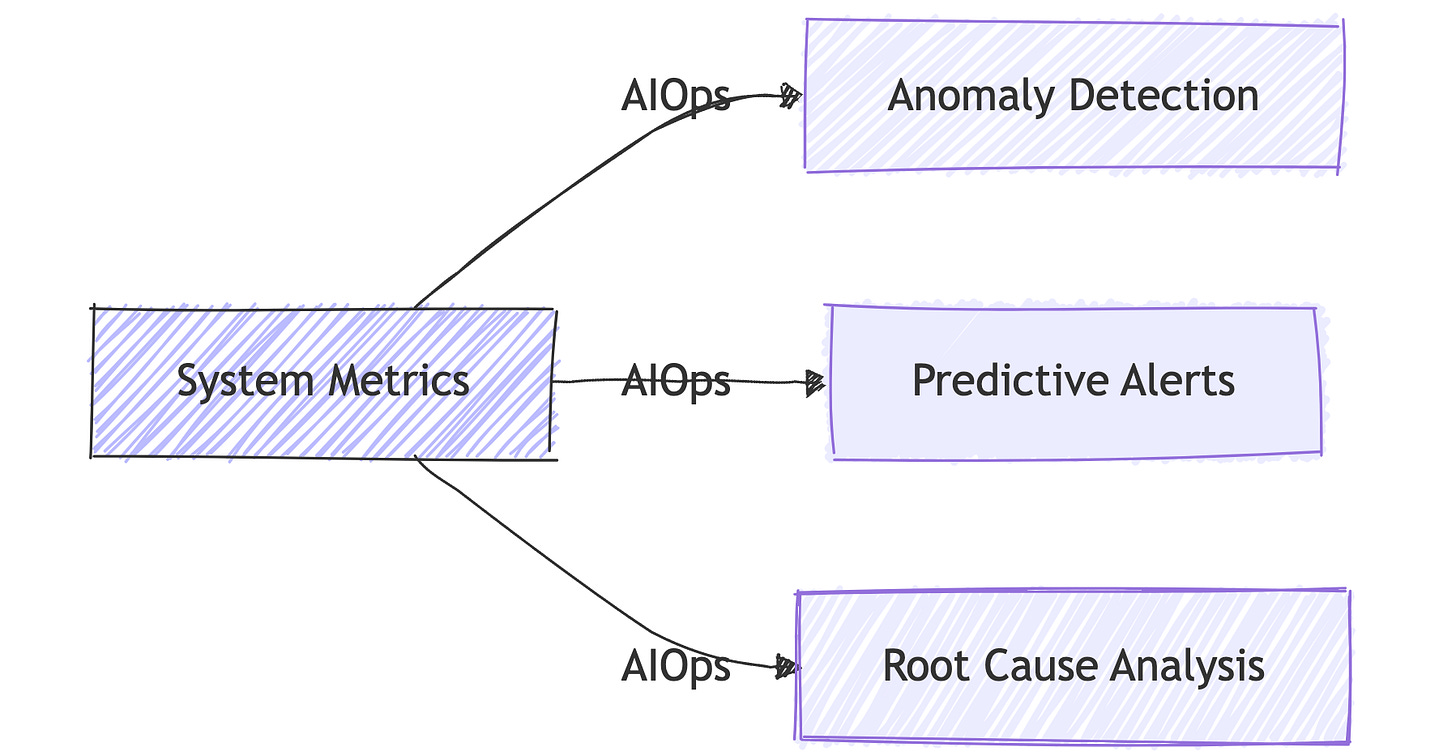

The Role of AIOps

AIOps Functions Explained:

🔍 Anomaly Detection: Automatically spot unusual system behavior

⚡ Predictive Alerts: Warn about potential issues before they occur

🔬 Root Cause Analysis: Quickly identify the source of problems
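Production AIOps platforms use far richer models, but the simplest form of anomaly detection is a rolling z-score: flag a metric value that sits more than a few standard deviations from its recent average. The window size and threshold below are illustrative assumptions, and predictive alerting and root cause analysis need more context than this sketch provides.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=10, z_threshold=3.0):
    """Return indices of values far outside the recent rolling window."""
    history = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            # Skip flat windows (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(v - mu) / sigma > z_threshold:
                anomalies.append(i)
        history.append(v)
    return anomalies
```

Fed a series of request latencies hovering around 100 ms with a single 450 ms spike, the function flags only the spike's index, which an alerting system could then page on.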

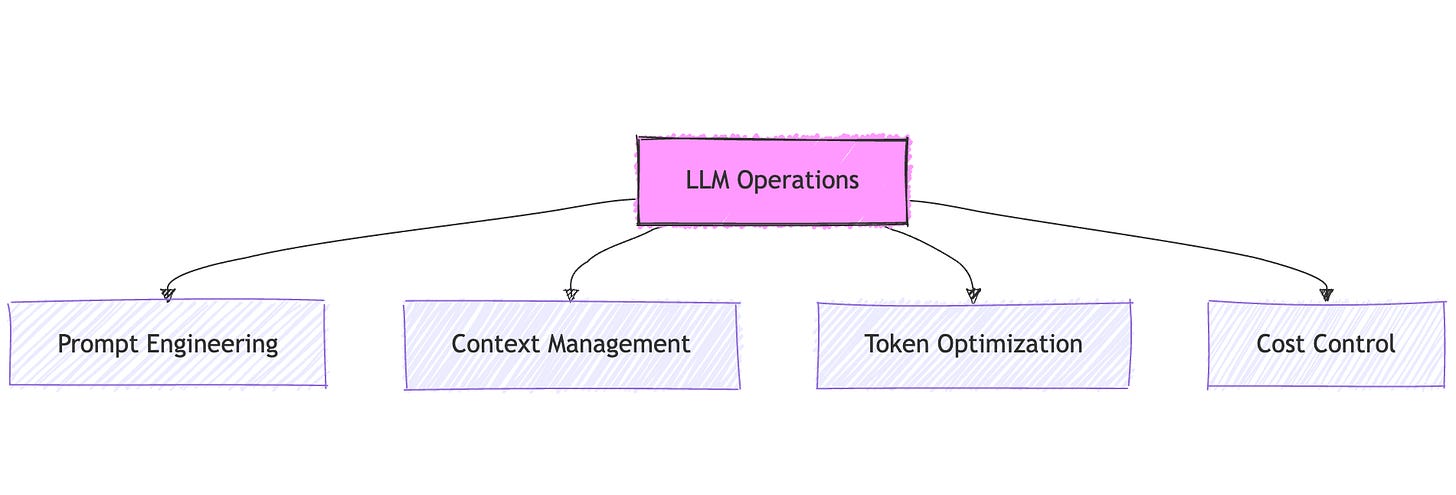

LLMOps: The New Frontier

LLMOps Components Explained:

📝 Prompt Engineering: Design and optimize model inputs

🧩 Context Management: Handle information provided to the model

⚡ Token Optimization: Keep prompts and responses within context limits and avoid spending tokens unnecessarily

💰 Cost Control: Monitor and optimize API usage costs
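Context management and cost control both come down to counting tokens. The sketch below assumes a rough heuristic of about four characters per token for English text (real systems should use the model's own tokenizer) and hypothetical per-1,000-token prices. It keeps the system prompt plus the most recent messages that fit a budget, then estimates what a request costs.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Rough heuristic: ~4 characters per token. Replace with a real tokenizer."""
    return max(1, len(text) // 4)


def fit_context(system_prompt: str, history: List[str], budget: int) -> List[str]:
    """Keep the system prompt, then the newest messages that still fit the budget."""
    used = estimate_tokens(system_prompt)
    kept: List[str] = []
    for message in reversed(history):  # newest first
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [system_prompt] + list(reversed(kept))  # restore chronological order


def request_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Estimated cost of one call, given hypothetical per-1K-token prices."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k
```

Dropping the oldest messages first is the simplest policy; summarizing older turns or retrieving only relevant documents are common refinements when the truncated history still matters.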

Key Takeaways

MLOps Extends DevOps: It's not a replacement, but an extension for ML-specific challenges

Data is Central: Unlike traditional apps, data quality directly affects system performance

Continuous Learning: Systems need to be monitored not just for uptime, but for accuracy

Multiple Feedback Loops: Changes in data, model performance, and system health all matter

"The future belongs to teams that can bridge the gap between development, operations, and data science." - Andrew Ng

What's Next?

Now that you understand what MLOps is (and isn't), we'll dive into the practical tools you'll need in our next article, "The MLOps Toolbox: From Jenkins to Kubeflow." We'll explore how familiar DevOps tools map to their MLOps counterparts.

Series Navigation

📚 DevOps to MLOps Roadmap Series

Series Home: From DevOps to AIOps, MLOps, LLMOps - The DevOps Engineer's Guide to the AI Revolution

Previous: Speaking AI: The DevOps Engineer's Translation Guide

💡 Subscribe to the series to get notified when new articles are published!