Part II - AI in Action: Understanding ML and LLM Applications

Roadmap Series Part II - A DevOps Engineer's Guide to Real-World AI Systems

"If you want to understand something, look at how it's actually used in the wild."

- Kelsey Hightower

Remember when we first started working with microservices? Understanding the architecture became much easier when we looked at real examples like Netflix or Uber. The same applies to AI systems – let's explore how they actually work in production.

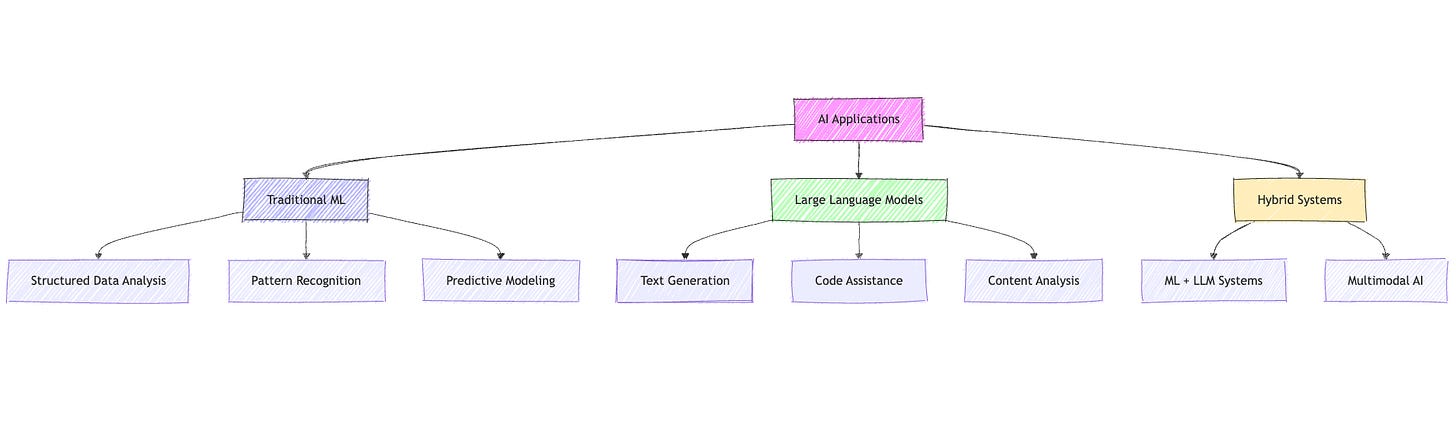

The Three Pillars of AI Applications

Before we dive into specific examples, let's understand the three main categories of AI applications you'll encounter in production: traditional ML, large language models, and hybrid systems that combine the two.

Traditional ML in Production

Let's start with systems you're probably already supporting without realizing they're ML applications:

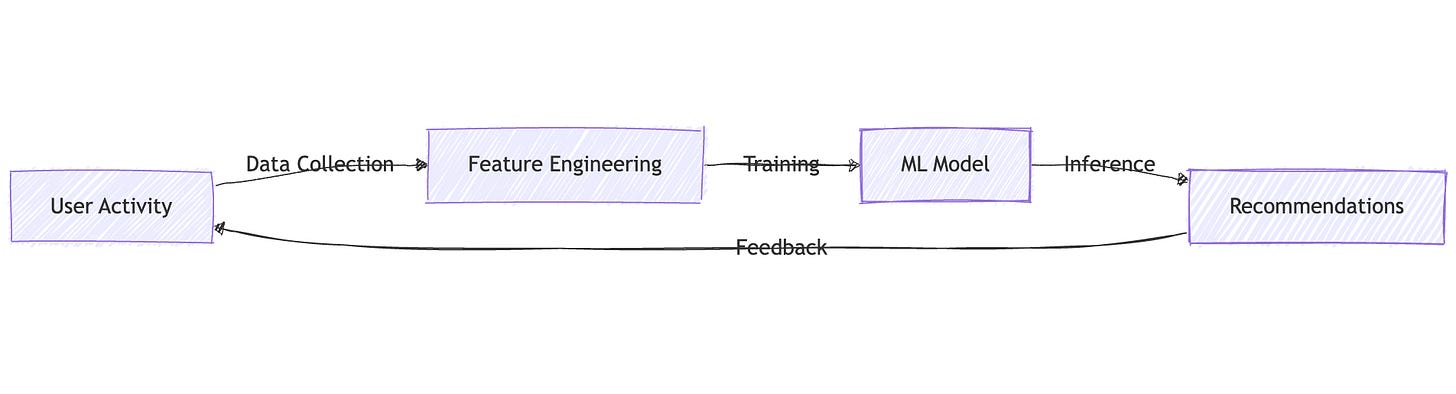

1. Recommendation Engines (e.g., Netflix, Spotify)

What DevOps Sees:

High-throughput data pipelines

Real-time processing requirements

Complex caching strategies

A/B testing infrastructure
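To make the A/B testing item concrete, here is a minimal sketch of deterministic bucket assignment, the kind of thing that often sits in front of a recommendation model. The function name, experiment name, and variant labels are all hypothetical, and nothing here is taken from Netflix's or Spotify's actual systems.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hypothetical helper for illustration only.
    """
    # Hash the experiment name together with the user id so the same user
    # can land in different buckets for different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as an integer and fold it onto the variant list.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    variants = ["control", "new_ranker"]  # assumed variant names
    print(assign_variant("user-42", "homepage_recs", variants))
```

Because the assignment is a pure function of the user and experiment, any replica can compute it without shared state, which keeps the experiment layer easy to scale and easy to reason about during an incident.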

"Netflix's recommendation system saves them $1 billion per year in customer retention. It's not just about the algorithms – it's about the infrastructure that makes them reliable."

- Netflix Engineering Blog

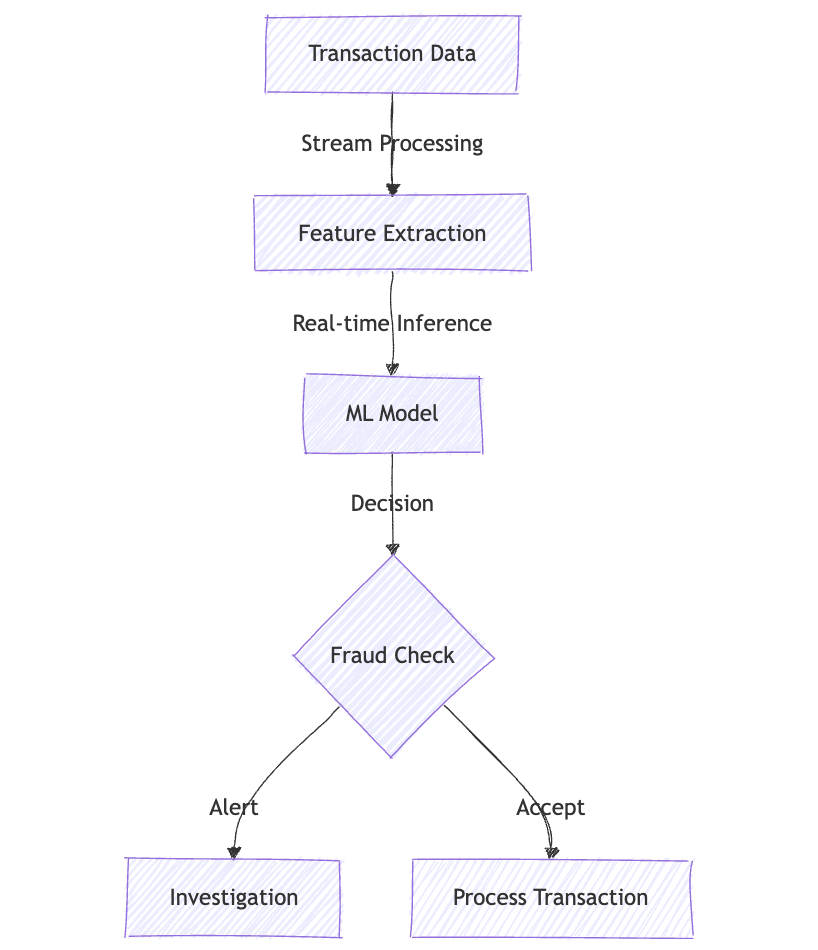

2. Fraud Detection Systems

DevOps Considerations:

Sub-second response requirements

High availability demands

Strict security compliance

Extensive monitoring and logging
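The sub-second requirement usually translates into a latency budget with a safe fallback. The sketch below shows one way that could look, assuming a hypothetical scoring function and a hypothetical "review" fallback decision; the 200 ms budget is an illustrative number, not a recommendation.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

logger = logging.getLogger("fraud")
_executor = ThreadPoolExecutor(max_workers=4)


def score_with_budget(score_fn, transaction, budget_seconds=0.2, fallback="review"):
    """Run score_fn(transaction), but give up once the latency budget is spent."""
    future = _executor.submit(score_fn, transaction)
    try:
        return future.result(timeout=budget_seconds)
    except FutureTimeout:
        # cancel() only helps if the task has not started yet; a call that is
        # already running keeps going in its worker thread.
        future.cancel()
        logger.warning("scoring exceeded %.3fs budget, using fallback", budget_seconds)
        return fallback


def rule_based_model(transaction):
    """Hypothetical stand-in for a real model: flag large amounts."""
    return "decline" if transaction["amount"] > 1000 else "approve"


def slow_model(transaction):
    """Hypothetical model that is too slow for the budget."""
    time.sleep(0.5)
    return "approve"


if __name__ == "__main__":
    print(score_with_budget(rule_based_model, {"amount": 50}))
    print(score_with_budget(slow_model, {"amount": 50}, budget_seconds=0.05))
```

The design choice worth noticing is that a timeout produces an explicit, logged decision rather than an error, so the payment path stays available even when the model is not.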

Large Language Models in Production

Now let's look at the new kids on the block – LLM applications:

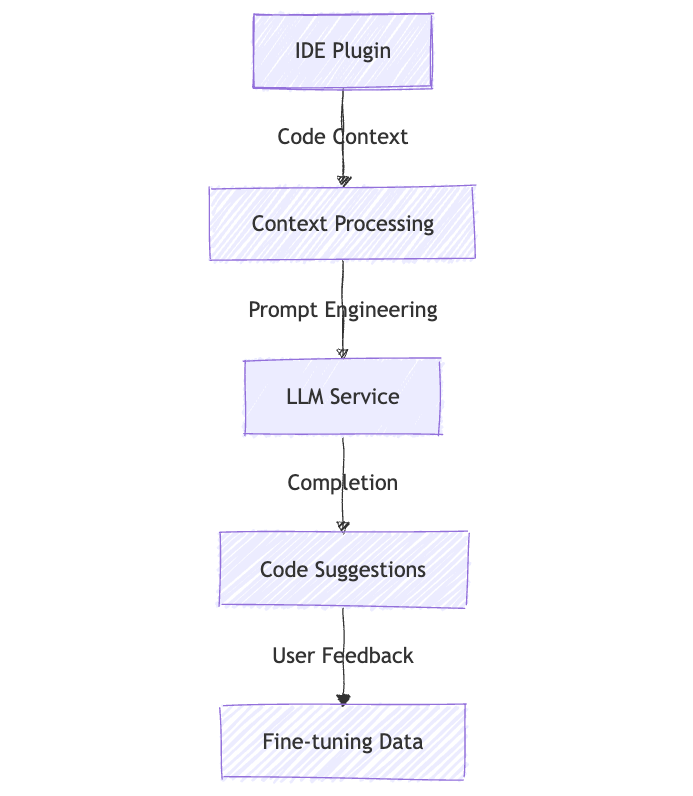

1. Code Assistants (e.g., GitHub Copilot)

Infrastructure Requirements:

GPU clusters for inference

Low-latency response times

Version control for models

Prompt management systems
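Model versioning and prompt management can feel abstract, so here is a rough sketch of a versioned prompt registry. The class names, the prompt text, and the model identifiers are all made up for illustration; this is not how GitHub Copilot or any particular product manages prompts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    model: str  # identifier of the model this prompt was validated against


class PromptRegistry:
    """In-memory registry; a real one would live in a database or in git."""

    def __init__(self):
        self._versions = {}

    def register(self, name, template, model):
        history = self._versions.setdefault(name, [])
        entry = PromptVersion(name, len(history) + 1, template, model)
        history.append(entry)
        return entry

    def get(self, name, version=None):
        history = self._versions[name]
        if version is None:
            return history[-1]  # latest version
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("complete_code", "Complete this code:\n{code}", "model-a")
    registry.register("complete_code", "Continue the snippet:\n{code}", "model-b")
    latest = registry.get("complete_code")
    print(latest.version, latest.model)
    print(latest.template.format(code="def add(a, b):"))
```

Pinning each prompt version to the model it was tested with gives you the same rollback story you already have for container images: if a new combination misbehaves, you redeploy the previous pair.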

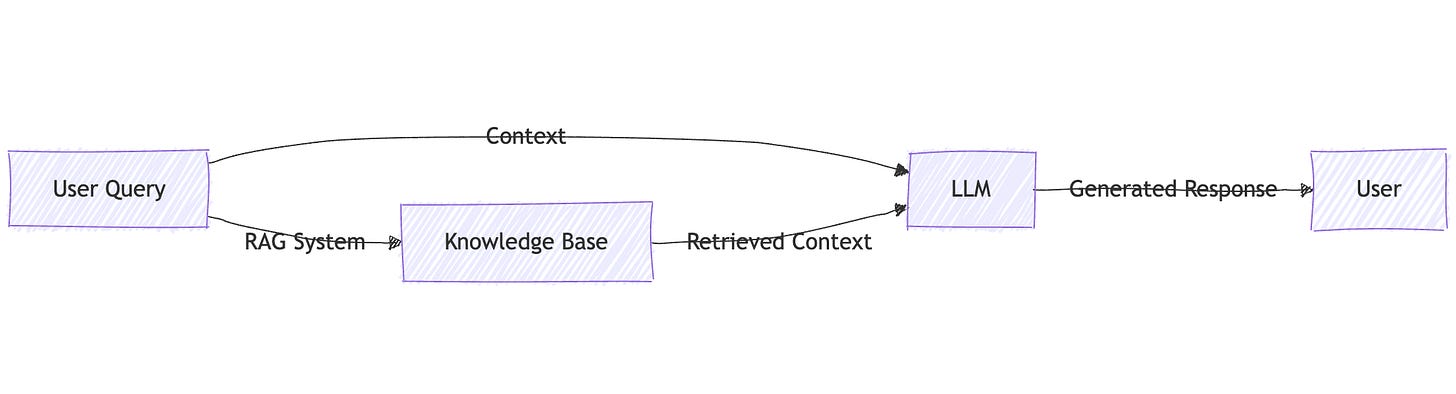

2. Customer Service Chatbots

"The magic of modern chatbots isn't just in the LLM – it's in the carefully orchestrated system of retrieval, generation, and verification."

- Andrej Karpathy
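The retrieval, generation, and verification flow mentioned in the quote can be sketched in a few lines. Everything below is a toy under stated assumptions: retrieval is plain keyword overlap, `generate` is a stub standing in for a real LLM call, and the verification rule (the answer must appear in a retrieved document) is deliberately simplistic.

```python
ESCALATION_MESSAGE = "Let me connect you with a human agent."


def retrieve(question, knowledge_base, top_k=2):
    """Rank documents by how many words they share with the question."""
    question_words = set(question.lower().split())
    scored = [
        (len(question_words & set(doc.lower().split())), doc)
        for doc in knowledge_base
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def generate(question, context):
    """Stub for an LLM call: echo the best retrieved document, if any."""
    return context[0] if context else ""


def verify(answer, context):
    """Accept only answers that are grounded in a retrieved document."""
    return bool(answer) and any(answer in doc for doc in context)


def answer_question(question, knowledge_base):
    context = retrieve(question, knowledge_base)
    draft = generate(question, context)
    if verify(draft, context):
        return draft
    # Nothing grounded to say: hand off instead of guessing.
    return ESCALATION_MESSAGE


if __name__ == "__main__":
    kb = [
        "Refunds are processed within 5 business days.",
        "Our support line is open on weekdays.",
    ]
    print(answer_question("How long do refunds take?", kb))
    print(answer_question("Tell me a joke", kb))
```

From an operations point of view, each of the three stages is a separate dependency with its own latency, failure modes, and metrics, which is why these systems are usually monitored stage by stage.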

Hybrid Systems: The Best of Both Worlds

Modern AI applications often combine traditional ML and LLMs:

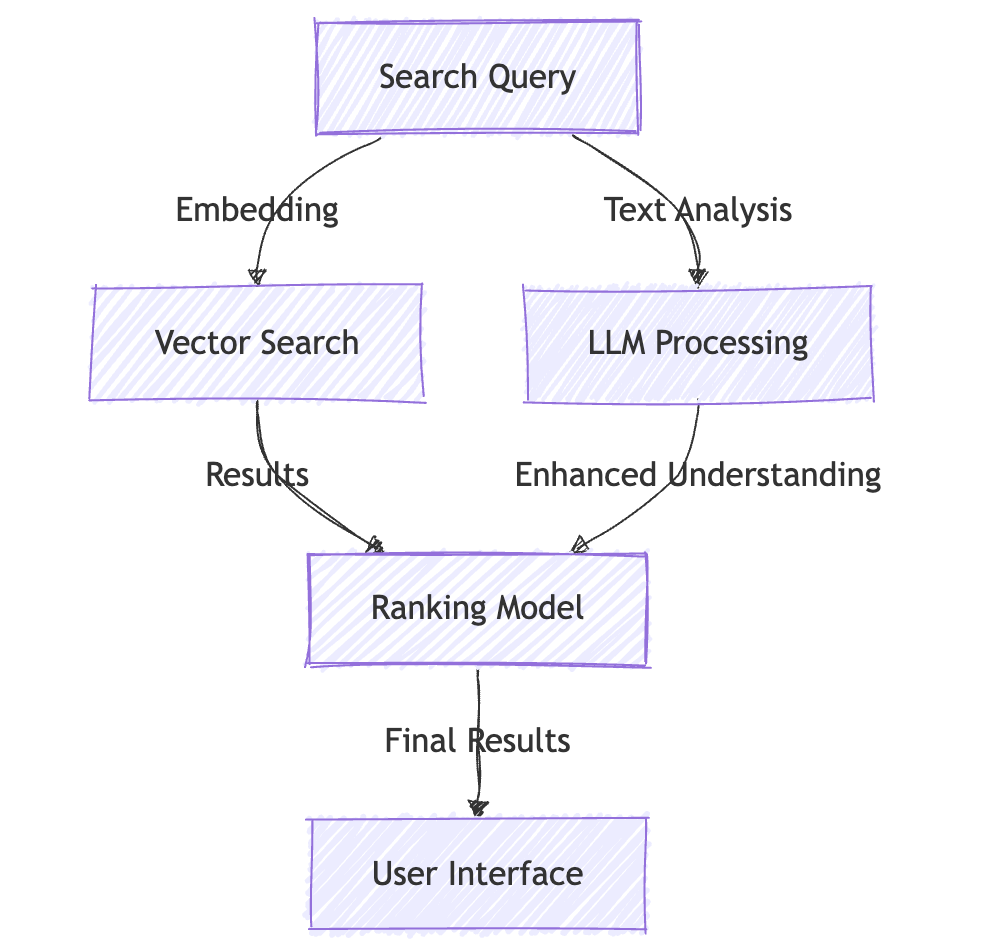

Search Engines (e.g., Modern Enterprise Search)

DevOps Challenges:

Managing vector databases

Scaling embedding services

Orchestrating multiple AI services

Cost optimization
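If vector databases are new to you, the core operation is simpler than the name suggests: store one vector per document and return the nearest ones to a query vector. The sketch below does this with cosine similarity in plain Python. The document names and the two-dimensional vectors are invented for illustration; real embeddings come from an embedding service and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vector, index, top_k=3):
    """Return the ids of the top_k most similar documents (brute force)."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:top_k]]


if __name__ == "__main__":
    # Hypothetical toy index: document id -> embedding vector.
    index = {
        "runbook": [1.0, 0.0],
        "invoice": [0.0, 1.0],
        "postmortem": [0.9, 0.1],
    }
    print(search([1.0, 0.0], index, top_k=2))
```

This brute-force scan compares the query against every document, which is exactly the cost a dedicated vector database tries to avoid with approximate nearest-neighbor indexes.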

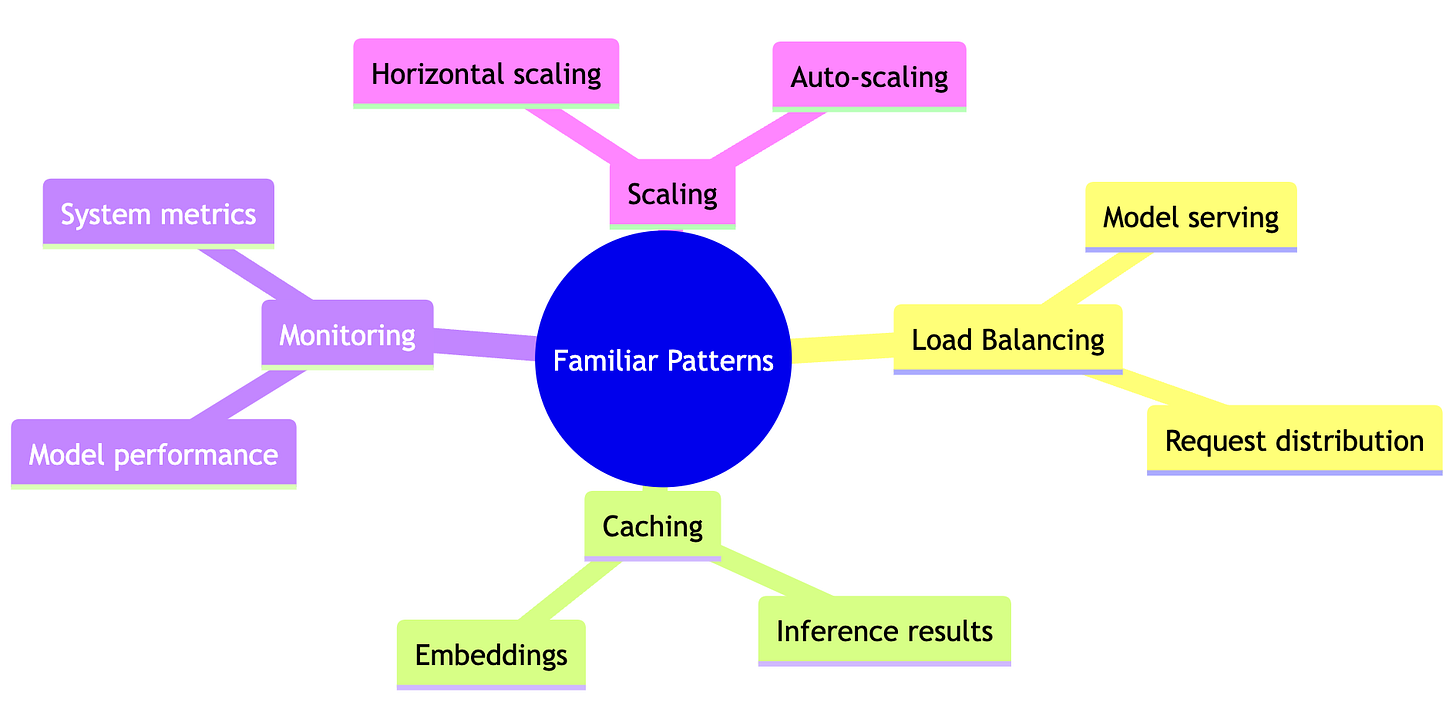

Infrastructure Patterns You Already Know

The good news? Many patterns you're familiar with apply to AI systems:
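As one illustration, retrying a flaky dependency with exponential backoff works the same way whether the dependency is a database or a model server. This is a minimal sketch; the function names, the `ConnectionError` failure mode, and the delay values are assumptions chosen for the example.

```python
import time


def call_with_retries(fn, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call fn(), retrying on ConnectionError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            # Wait base_delay, then 2x, then 4x, and so on.
            sleep(base_delay * (2 ** attempt))


if __name__ == "__main__":
    state = {"calls": 0}

    def flaky_model_call():
        """Hypothetical model-server call that fails twice, then succeeds."""
        state["calls"] += 1
        if state["calls"] < 3:
            raise ConnectionError("model server unavailable")
        return "prediction"

    print(call_with_retries(flaky_model_call))
```

Passing `sleep` in as a parameter is only there to make the backoff easy to test without real waiting.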

Key Takeaways for DevOps Engineers

AI Systems Are Still Systems: They need the same care and feeding as any other production service.

Infrastructure Patterns Transfer: Your knowledge of scaling, monitoring, and reliability still applies.

New Components, Same Principles: Vector databases and model servers are just new tools in your toolkit.

"The most reliable AI systems aren't the ones with the fanciest models – they're the ones with rock-solid operations."

- Chip Huyen

What's Next?

Now that you've seen AI systems in action, you might be wondering about all the new terminology you've encountered. Don't worry – in our next article, "Speaking AI: The DevOps Engineer's Translation Guide," we'll decode all this new vocabulary using concepts you already know.

Remember: Every complex AI system you've seen here started as a simple proof of concept. The key is to start small, apply your existing knowledge, and build up from there.

Series Navigation

📚 DevOps to MLOps Roadmap Series

Series Home: From DevOps to AIOps, MLOps, LLMOps - The DevOps Engineer's Guide to the AI Revolution

Previous: The AI Revolution: A DevOps Engineer's Survival Guide
