The rise of AI, machine learning (ML), and large language models (LLMs) has transformed how we build applications. In 2025, the process of creating these applications involves a wide range of roles, each contributing their expertise to ensure the system is effective, scalable, and reliable. This post explores the key personas involved, their responsibilities, and how the evolving role of MLOps fits into this ecosystem.

Thanks for reading MLOps.tv | School of Devops & AI! Subscribe for free to receive new posts and support my work.

Core Roles in Building AI/ML/LLM Applications

To understand the landscape, let’s break down the essential roles and their responsibilities:

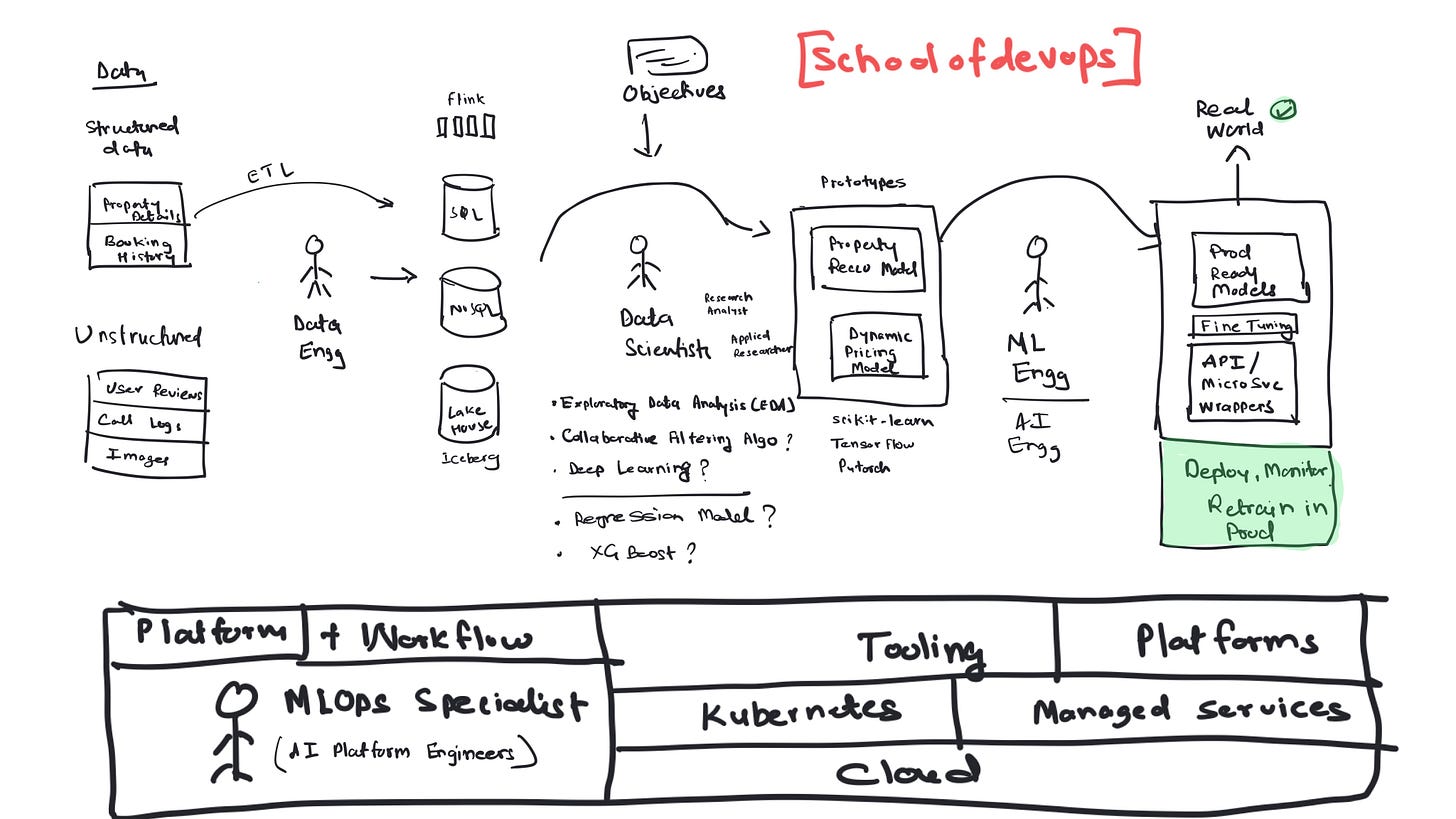

1. Data Engineer

Primary Role: Preparing and managing the data infrastructure.

Data Engineers are responsible for building and maintaining the pipelines that collect, process, and store data used by ML models. Their tasks include:

Designing ETL (Extract, Transform, Load) pipelines.

Ensuring data quality, consistency, and availability.

Integrating data from various sources into a centralized repository, often a data lake or data warehouse.

Collaborating with Data Scientists to provide them with the datasets needed for model training.

Key Skills: SQL, Spark, Kafka, cloud data services (Amazon Redshift, Google BigQuery), and Python.
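To make the ETL idea concrete, here is a minimal sketch in Python using only the standard library. The CSV export, the `bookings` table, and the data-quality rule (skip rows with missing or non-numeric fields) are all hypothetical. A production pipeline would read from real source systems and typically run on tools like Spark or a cloud warehouse rather than in-memory SQLite.

```python
import csv
import io
import sqlite3

# Hypothetical raw export of booking events (stands in for a real source system).
RAW_CSV = """user_id,property_id,nights,price_per_night
u1,p10,3,4500
u2,p11,,5200
u1,p12,2,not_a_number
u3,p10,1,4800
"""


def extract(raw: str) -> list[dict]:
    """Extract: parse raw CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: cast types, derive booking totals, and drop malformed rows."""
    clean = []
    for row in rows:
        try:
            nights = int(row["nights"])
            price = float(row["price_per_night"])
        except (TypeError, ValueError):
            continue  # simple data-quality rule: skip rows with missing or non-numeric fields
        clean.append((row["user_id"], row["property_id"], nights, nights * price))
    return clean


def load(records: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write clean records into a warehouse-like table (SQLite here)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS bookings "
        "(user_id TEXT, property_id TEXT, nights INTEGER, total REAL)"
    )
    conn.executemany("INSERT INTO bookings VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(total) FROM bookings").fetchone())  # (2, 18300.0)
```

Only two of the four raw rows reach the table; the other two are rejected by the validation step in `transform`, which is where data-quality checks usually live.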

2. Data Scientist

Primary Role: Exploring data and building statistical models.

Data Scientists analyze data to uncover insights and develop initial predictive models. Their work focuses on:

Conducting exploratory data analysis (EDA) to understand patterns and trends.

Developing and validating traditional ML models (e.g., regression, classification).

Experimenting with statistical and predictive modeling techniques.

Creating visualizations to communicate findings to stakeholders.

Key Skills: Python/R, statistics, machine learning libraries (scikit-learn, XGBoost), and tools like Jupyter Notebooks.
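As a tiny illustration of developing and validating a traditional model, the sketch below fits a one-feature linear regression by ordinary least squares and checks its error on held-out points. The data is made up for illustration; in practice a Data Scientist would typically use scikit-learn's `LinearRegression` rather than the closed-form code shown here.

```python
from statistics import mean


def fit_linear_regression(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares (closed form, one feature)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def mean_squared_error(xs, ys, intercept, slope):
    """Average squared gap between observed and predicted values."""
    return mean((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Made-up data: property size (tens of sq m) vs. nightly price (thousands).
train_x, train_y = [1, 2, 3, 4, 5], [3.1, 4.9, 7.2, 8.8, 11.0]
valid_x, valid_y = [6, 7], [13.1, 14.8]  # held out to validate the fit

intercept, slope = fit_linear_regression(train_x, train_y)
print(f"price ~ {intercept:.2f} + {slope:.2f} * size")
print(f"validation MSE: {mean_squared_error(valid_x, valid_y, intercept, slope):.3f}")
```

Evaluating on points the model never saw during fitting is the "validating" half of the job; a low training error alone says little about how the model will generalize.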

3. Machine Learning Engineer (ML Engineer)

Primary Role: Implementing, training, and deploying models.

ML Engineers bridge the gap between research and production. Their responsibilities include:

Implementing machine learning models into production systems.

Optimizing models for performance and scalability.

Building data pipelines for training and inference.

Ensuring model deployment, monitoring, and retraining workflows.

Key Skills: Python, TensorFlow/PyTorch, cloud services, containerization (Docker), and APIs.
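Much of the ML Engineer's production work is wrapping a trained model behind a well-defined interface. The sketch below shows that shape in plain Python: a hypothetical `LinearModel` artifact, input validation, and a versioned JSON response. The handler is framework-agnostic and purely illustrative; in a real service it would sit behind a web framework or model server and load the model from a registry instead of hard-coding coefficients.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearModel:
    """Stand-in for a trained model artifact; coefficients here are hypothetical."""
    version: str
    intercept: float
    weights: dict

    def predict(self, features: dict) -> float:
        return self.intercept + sum(w * features[name] for name, w in self.weights.items())


# In a real service this would be loaded from a model registry at startup.
MODEL = LinearModel(version="2025-01-15", intercept=1.0, weights={"size": 2.0, "rating": 0.5})


def handle_predict(body: str) -> tuple:
    """Validate a JSON request body, run inference, and return (HTTP status, JSON body)."""
    try:
        payload = json.loads(body)
        features = {name: float(payload[name]) for name in MODEL.weights}
    except (KeyError, TypeError, ValueError) as exc:  # json.JSONDecodeError is a ValueError
        return 400, json.dumps({"error": f"invalid request: {exc!r}"})
    prediction = MODEL.predict(features)
    return 200, json.dumps({"prediction": prediction, "model_version": MODEL.version})


print(handle_predict('{"size": 3, "rating": 4}'))
print(handle_predict('{"size": 3}'))
```

Returning the model version with every prediction makes it much easier to trace a bad prediction back to a specific deployment when monitoring flags a problem.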

4. AI Engineer (Often Merged with ML Engineer)

Primary Role: Designing and deploying AI systems (including LLMs).

In many organizations, the AI Engineer and ML Engineer roles are combined because their skill sets overlap heavily. AI Engineers focus on building applications that use advanced AI technologies such as LLMs. Their responsibilities include:

Fine-tuning pre-trained LLMs for specific tasks.

Designing systems that integrate AI models with other application components.

Working on embedding-based retrieval systems and RAG (retrieval-augmented generation) architectures.

Ensuring inference latency and performance meet user needs.

Key Skills: Transformers, Hugging Face, LangChain, prompt engineering, and neural network optimization.
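The retrieval half of a RAG architecture can be sketched in a few lines: embed the documents, embed the query, rank by cosine similarity, and place the top matches into the prompt. To stay self-contained, this sketch uses word counts as a stand-in for embeddings, and the listing texts are invented. A real system would use a learned embedding model (for example, one from Hugging Face) plus a vector store, and would send the resulting prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical property descriptions to retrieve from.
DOCS = [
    "Beachfront villa in Goa with Wi-Fi and a private pool.",
    "City apartment near the metro, pets not allowed.",
    "Pet-friendly cottage in the hills with fast Wi-Fi.",
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# "Index" the corpus once, as a vector store would.
INDEX = [(doc, embed(doc)) for doc in DOCS]


def retrieve(query: str, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str) -> str:
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query))
    return f"Answer using only these listings:\n{context}\n\nQuestion: {query}"


print(build_prompt("beachfront villa with Wi-Fi"))
```

Swapping `embed` for a real embedding model changes retrieval quality, not the overall flow, which is why the retrieve-then-prompt structure is a useful mental model.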

Note: In startups and smaller teams, ML Engineers often take on AI-specific tasks, including working with LLMs and generative models. Larger organizations may distinguish these roles to allow specialization.

5. MLOps Specialist / AI Platform Engineer

Primary Role: Managing the lifecycle of ML models and building infrastructure for scalable AI systems.

MLOps Specialists or AI Platform Engineers focus on the operationalization of ML workflows. Their responsibilities include:

Developing and automating ML pipelines for model training, testing, deployment, and monitoring.

Enabling collaboration and version control for code, models, and datasets.

Containerizing models with Docker and deploying them on orchestration platforms such as Kubernetes.

Implementing model monitoring, observability, and automated retraining.

Optimizing infrastructure for cost-efficiency and scalability.

Establishing model governance frameworks and ensuring compliance.

Key Skills: Kubernetes, Docker, Terraform, MLflow, cloud platforms, and monitoring tools like Prometheus.
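Monitoring and automated retraining often hinge on a drift check. One common choice among several is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against its training baseline. This sketch assumes fixed, hand-picked bin cut points and uses the widely quoted 0.2 threshold as a retraining trigger; that threshold is a rule of thumb rather than a standard, and teams usually tune it per feature.

```python
import math
from bisect import bisect_right


def bin_proportions(values, cut_points):
    """Share of values in each bin; bins are open-ended below the first and above the last cut."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    # Floor empty bins at a tiny value so the log term below stays finite.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline, live, cut_points):
    """Population Stability Index: sum of (live - base) * ln(live / base) over bins."""
    base = bin_proportions(baseline, cut_points)
    cur = bin_proportions(live, cut_points)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


PSI_RETRAIN_THRESHOLD = 0.2  # common rule of thumb, not a universal standard


def should_retrain(baseline, live, cut_points) -> bool:
    return psi(baseline, live, cut_points) > PSI_RETRAIN_THRESHOLD


# Hypothetical nightly prices seen at training time vs. in production.
cut_points = [1500, 2500, 3500]
training_prices = [1000, 2000, 3000, 4000] * 25
stable_prices = [1000, 2000, 3000, 4000] * 10
shifted_prices = [3000] * 30 + [4000] * 70

print(should_retrain(training_prices, stable_prices, cut_points))   # False
print(should_retrain(training_prices, shifted_prices, cut_points))  # True
```

In a pipeline, a `True` result would typically open an alert or kick off the retraining job rather than retrain blindly.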

Note: This role is often considered a specialization of DevOps with added ML expertise. In smaller organizations, these responsibilities may overlap with those of ML or AI Engineers.

The Travel Platform Use Case: AI and ML in Harmony

Imagine a travel platform, like Airbnb, that leverages both foundation models and traditional ML to deliver an exceptional user experience. While AI and ML are critical components, they’re only one part of a larger tech ecosystem that includes microservices, databases, APIs, and more. Here’s how the team comes together to create value:

Scenario:

The platform wants to:

Provide personalized property recommendations based on user preferences.

Enable natural language search for seamless bookings.

How Roles Contribute:

Data Engineer: Aggregates structured and unstructured data, including user behavior, property details, and booking history. Ensures this data is accessible and clean for both traditional ML and LLM-based applications.

Data Scientist: Builds a collaborative filtering recommendation model to predict user preferences. Separately, develops a pricing prediction model for dynamic deal optimization.

ML/AI Engineer: Fine-tunes a pre-trained foundation LLM to handle complex natural language queries like “Find me a beachfront villa for under ₹10,000 a night with Wi-Fi and pet-friendly options.” They also build APIs that connect the LLM to the recommendation system and ensure the models integrate cleanly with the broader application.

MLOps Specialist: Deploys both the LLM and ML models into production, automating retraining pipelines for the recommendation system and monitoring the LLM’s query performance. They ensure scalability and reliability of the system, optimizing resources to handle surges in user activity.
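The Data Scientist's collaborative filtering model can be illustrated with a minimal item-based variant: two properties count as similar if the same users booked both, and a user is recommended unseen properties that resemble ones they already booked. The users, properties, and interactions below are invented for illustration, and item-based cosine similarity is only one collaborative filtering approach (matrix factorization is another common one).

```python
import math
from collections import defaultdict

# Hypothetical implicit feedback: which users booked which properties.
INTERACTIONS = {
    "asha": {"villa_goa", "cottage_manali"},
    "ben": {"villa_goa", "cottage_manali", "houseboat_kerala"},
    "chen": {"villa_goa", "houseboat_kerala"},
    "dev": {"flat_mumbai"},
}


def item_users(interactions):
    """Invert user -> items into item -> set of users who interacted with it."""
    users_by_item = defaultdict(set)
    for user, items in interactions.items():
        for item in items:
            users_by_item[item].add(user)
    return users_by_item


def item_similarity(users_by_item, a, b):
    """Cosine similarity between two items' binary user vectors."""
    ua, ub = users_by_item[a], users_by_item[b]
    if not ua or not ub:
        return 0.0
    return len(ua & ub) / math.sqrt(len(ua) * len(ub))


def recommend(user, interactions, k=2):
    """Score each unseen item by its total similarity to the user's booked items."""
    users_by_item = item_users(interactions)
    seen = interactions[user]
    scores = {
        candidate: sum(item_similarity(users_by_item, candidate, s) for s in seen)
        for candidate in users_by_item
        if candidate not in seen
    }
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [item for item, score in ranked[:k] if score > 0]


print(recommend("asha", INTERACTIONS))  # ['houseboat_kerala']
```

Note that `dev` gets no recommendations because nobody else booked `flat_mumbai`; this cold-start gap is one reason real systems blend collaborative filtering with content features.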

The Bigger Picture:

While AI and ML enhance personalization and user experience, they are just components of the larger travel tech ecosystem. Microservices handle core functionalities like user authentication, payment processing, and inventory management. Databases store property information, and the tech stack ensures high availability and fault tolerance.

Outcome:

Personalized Recommendations: The collaborative filtering model improves booking rates by matching users with properties they are most likely to book.

Conversational Search: The LLM provides a frictionless search experience, allowing users to express their preferences naturally.

Scalable Infrastructure: MLOps ensures the ML side of the system, from model APIs to the models themselves, scales smoothly even during peak travel seasons.

By combining traditional ML with cutting-edge AI, the platform delivers a superior experience, driving higher engagement and customer satisfaction.
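One common way to connect an LLM to conversational search is to have it translate the user's query into structured filters (JSON), which ordinary application code then applies to the listings database. The sketch below hard-codes what such LLM output might look like for the example query from the scenario; the schema, field names, and listings are hypothetical, and a real system would validate the model's JSON before trusting it.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SearchFilters:
    """Hypothetical schema the LLM is prompted to fill in."""
    property_type: Optional[str] = None
    max_price_inr: Optional[float] = None  # exclusive upper bound ("under ...")
    required_amenities: set = field(default_factory=set)


# What the LLM might return for:
# "Find me a beachfront villa for under ₹10,000 a night with Wi-Fi and pet-friendly options."
LLM_OUTPUT = (
    '{"property_type": "villa", "max_price_inr": 10000, '
    '"required_amenities": ["beachfront", "wifi", "pets_allowed"]}'
)

# Hypothetical listings, standing in for the property database.
LISTINGS = [
    {"id": "p1", "type": "villa", "price_inr": 8500, "amenities": {"beachfront", "wifi", "pets_allowed"}},
    {"id": "p2", "type": "villa", "price_inr": 12000, "amenities": {"beachfront", "wifi", "pets_allowed"}},
    {"id": "p3", "type": "villa", "price_inr": 9000, "amenities": {"beachfront", "wifi"}},
    {"id": "p4", "type": "apartment", "price_inr": 4000, "amenities": {"wifi", "pets_allowed"}},
]


def parse_filters(raw: str) -> SearchFilters:
    """Turn the LLM's JSON into typed filters (a real system would validate more strictly)."""
    data = json.loads(raw)
    return SearchFilters(
        property_type=data.get("property_type"),
        max_price_inr=data.get("max_price_inr"),
        required_amenities=set(data.get("required_amenities", [])),
    )


def search(filters: SearchFilters, listings: list) -> list:
    """Apply structured filters with ordinary application code, not the LLM."""
    matches = []
    for listing in listings:
        if filters.property_type and listing["type"] != filters.property_type:
            continue
        if filters.max_price_inr is not None and listing["price_inr"] >= filters.max_price_inr:
            continue
        if not filters.required_amenities <= listing["amenities"]:
            continue
        matches.append(listing["id"])
    return matches


print(search(parse_filters(LLM_OUTPUT), LISTINGS))  # ['p1']
```

Keeping the actual filtering in deterministic code means prices and availability come from the database, not from whatever the LLM happens to generate.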

How MLOps Fits Into the Puzzle

MLOps is the enabler that supports the entire ML lifecycle, ensuring reliability and scalability. Here’s how it interacts with other roles:

Collaboration with Data Engineers: MLOps professionals rely on clean, well-structured data pipelines to automate training and retraining workflows.

Enabling Data Scientists: By providing tools for experimentation and version control, MLOps helps Data Scientists focus on model development without worrying about infrastructure.

Supporting ML/AI Engineers: MLOps ensures that models can transition smoothly from development to production, with CI/CD pipelines, logging, and monitoring in place.

A DevOps Specialization: MLOps borrows principles from DevOps (e.g., containerization, infrastructure as code) and applies them to the unique challenges of ML systems, such as versioning data and models, detecting drift, and retraining. This work is often handled by DevOps Engineers who specialize in MLOps.
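A typical piece of the CI/CD that MLOps puts around models is a promotion gate: before a candidate model replaces the one in production, its offline metrics are compared against the current version and against operational budgets. The `EvalReport` type, metric names, and thresholds below are hypothetical; the sketch is meant to show the pattern, not a specific tool's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalReport:
    """Offline evaluation results for one model version (metric names are hypothetical)."""
    version: str
    auc: float
    p95_latency_ms: float


def promotion_gate(candidate, production, min_auc_gain=0.0, max_latency_ms=200.0):
    """Return (passed, reasons) for promoting `candidate` over the current `production` model."""
    reasons = []
    if candidate.auc < production.auc + min_auc_gain:
        reasons.append(f"AUC {candidate.auc:.3f} does not beat production {production.auc:.3f}")
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append(f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds {max_latency_ms:.0f} ms budget")
    return not reasons, reasons


def ci_exit_code(candidate, production) -> int:
    """Map the gate result to a process exit code so a CI job can pass or fail on it."""
    passed, reasons = promotion_gate(candidate, production)
    for reason in reasons:
        print(f"BLOCKED {candidate.version}: {reason}")
    return 0 if passed else 1


production = EvalReport("v12", auc=0.81, p95_latency_ms=120.0)
print(ci_exit_code(EvalReport("v13", auc=0.83, p95_latency_ms=140.0), production))
print(ci_exit_code(EvalReport("v14", auc=0.84, p95_latency_ms=450.0), production))
```

In a CI job, calling `sys.exit(ci_exit_code(candidate, production))` would fail the pipeline whenever the gate blocks promotion, so a slower or weaker model never reaches production silently.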

Key Skills for MLOps Specialists

To excel in MLOps, one needs a mix of DevOps and ML expertise. Essential skills include:

DevOps Fundamentals: Kubernetes, Docker, CI/CD pipelines, infrastructure-as-code tools (Terraform, Ansible).

ML Workflow Management: MLflow, TFX, SageMaker Pipelines.

Monitoring and Observability: Prometheus, Grafana, and tools for tracking model drift.

Evolving Roles: A Blurring Line

In many organizations, the roles of Data Scientists, ML Engineers, and AI Engineers overlap, especially in startups where team sizes are smaller. For example:

A Data Scientist might also deploy models in production.

An ML Engineer might design the data pipelines typically built by Data Engineers.

An AI Engineer might take on MLOps responsibilities to manage LLM deployments.

In larger organizations, these roles are more defined, with specialized teams handling distinct responsibilities.

Conclusion

Building AI/ML/LLM applications in 2025 is a collaborative effort involving multiple personas, each playing a crucial role. MLOps, or AI Platform Engineering, acts as the glue that binds these efforts, ensuring models are reliable, scalable, and impactful. Whether you’re a Data Scientist exploring new algorithms, an ML Engineer deploying models, or an MLOps Specialist optimizing workflows, your contributions are vital to the success of modern AI systems.

By understanding these roles and their interplay, organizations can build cohesive teams that drive innovation and deliver real-world value. If you work in this field, clarity on these roles can help you carve out your niche and grow your career.

Credits

Special thanks to Rahul Agarwal, MLE at Meta and author of the MLWhiz Newsletter (do check it out for awesome MLE resources), for helping me understand the different Data Science roles (Data Scientists, Research Scientists, and Applied Scientists).